Chance News 97: Difference between revisions

m (→Forsooth) |


| (31 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

December 21, 2013 to February 20, 2014 | |||

==Quotations== | ==Quotations== | ||

<blockquote> | <blockquote> | ||

| Line 37: | Line 39: | ||

"The more you are cited the better you are. It is self-evident. QED. But is it? Some academic papers are cited to be debunked." | "The more you are cited the better you are. It is self-evident. QED. But is it? Some academic papers are cited to be debunked." | ||

<div align=right><i>Significance</i>, December 2013</div> | <div align=right><i>Significance</i>, December 2013</div> | ||

Submitted by Margaret Cibes | |||

---- | |||

"There is a story about two friends, who were classmates in high school, talking about their jobs. One of them became a statistician and was working on population trends. He showed a reprint to his former classmate. The reprint started, as usual, with the Gaussian distribution and the statistician explained to his former classmate the meaning of the symbols for the actual population, for the average population, and so on. His classmate was a bit incredulous and was not quite sure whether the statistician was pulling his leg. "How can you know that?" was his query. "And what is this symbol here?" "Oh," said the statistician, "this is pi." "What is that?" "The ratio of the circumference of the circle to its diameter." "Well, now you are pushing your joke too far," said the classmate, "surely the population has nothing to do with the circumference of the circle." | |||

<div align=right>Eugene Wigner in [http://www.dartmouth.edu/~matc/MathDrama/reading/Wigner.html "The Unreasonable Effectiveness of Mathematics in the Natural Sciences"]<br> | |||

<i>Communications in Pure and Applied Mathematics</i>, February 1960</div> | |||

Submitted by Margaret Cibes | Submitted by Margaret Cibes | ||

| Line 42: | Line 50: | ||

:[[File:TN_BreastCancer.png ]] | :[[File:TN_BreastCancer.png ]] | ||

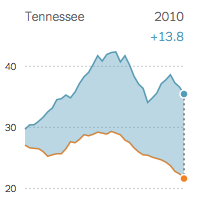

“The difference in mortality rates [in deaths per 100,000 women] between black women [top curve] and white women [bottom curve] with breast cancer has widened since 1975, in part because black women have not benefited as much from improvements in screening and treatment. Among the states with available data, Tennessee has the largest gap—'''with nearly 14 black women dying for every one white woman'''.” | “The difference in mortality rates [in deaths per 100,000 women] between black women [top curve] and white women [bottom curve] with breast cancer has widened since 1975, in part because black women have not benefited as much from improvements in screening and treatment. Among the states with available data, Tennessee has the largest gap—'''with nearly 14 black women dying for every one white woman'''.” [emphasis added] | ||

<div align=right>in: [http://www.nytimes.com/interactive/2013/12/20/health/a-racial-gap-in-breast-cancer-deaths.html?_r=0 A stark gap in breast cancer deaths] | <div align=right>in: [http://www.nytimes.com/interactive/2013/12/20/health/a-racial-gap-in-breast-cancer-deaths.html?_r=0 A stark gap in breast cancer deaths] | ||

(interactive graphic), ''New York Times'', 20 December 2013</div> | (interactive graphic), ''New York Times'', 20 December 2013</div> | ||

| Line 63: | Line 71: | ||

Submitted by Bill Peterson | Submitted by Bill Peterson | ||

---- | |||

<i>The New York Times</i> has printed two alternative covers of the <i>Sunday Times</i> magazine, for random distribution to subscribers around the country. That explains a headline that appeared in the week preceding the Super Bowl: [http://6thfloor.blogs.nytimes.com/2014/01/31/this-weeks-cover-accurately-predicts-the-super-bowl-winner-half-of-the-time/?_php=true&_type=blogs&_r=0 "This Week's Cover Accurately Predicts the Super Bowl Winner (Half of the Time)"] | |||

<div align=right><i>The New York Times</i>, January 31, 2014</div> | |||

Submitted by James Greenwood | |||

---- | |||

"Oh, aren't people stupid! Do you know the average IQ is only 100? That's terribly low, isn't it? One hundred. It's no wonder the world's in such a mess." | |||

<div align=right>Patient quoted by Oliver Sacks, in "The Abyss"<br> | |||

<i>The New Yorker</i>, September 24, 2007, p. 103</div> | |||

Submitted by Margaret Cibes | |||

==Sham knee surgery study== | ==Sham knee surgery study== | ||

| Line 83: | Line 104: | ||

===Discussion=== | ===Discussion=== | ||

1. What particular concerns might you have with sham surgical treatments | 1. We read in the WSJ, "Similar to the practice of giving some patients sugar pills or placebos in drug studies, simulated surgery is among the most rigorous ways to evaluate the effectiveness of surgical procedures." What particular concerns might you have with sham surgical treatments as opposed to less invasive placebo treatments like sugar pills? For more on this, see [http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1079669/ Sham procedures and the ethics of clinical trials] (''Journal of the Royal Society of Medicine,'' December 2004). | ||

2. The ''WSJ'' graphic cites [http://clinicaltrials.gov/ ClinicalTrials.gov], which | 2. The ''WSJ'' graphic cites [http://clinicaltrials.gov/ ClinicalTrials.gov], which maintains "a registry and results database of publicly and privately supported clinical studies of human participants conducted around the world." On that web site we find the following data on [http://clinicaltrials.gov/ct2/resources/trends#RegisteredStudiesOverTime trends over time] | ||

<center> | <center> | ||

| Line 115: | Line 136: | ||

|} | |} | ||

</center> | </center> | ||

How do these figures affect | How do these figures affect your impression of the ''WSJ'' graph? | ||

Submitted by Bill Peterson | Submitted by Bill Peterson | ||

| Line 212: | Line 233: | ||

Submitted by Paul Alper | Submitted by Paul Alper | ||

===How to read a map=== | |||

[http://www.directionsmag.com/articles/on-how-to-read-a-good-map/369257 On how to read a (good) map]<br> | |||

by Dr. Ian Muehlenhaus, ''Directions Magazine'', 27 January 2014 | |||

''Directions'' is an industry magazine covering trends in "geospatial information technology." Dr. Muehlenhaus, who contributed this article, teaches geography and cartography at the University of Wisconsin-La Crosse. He writes, "Just as you shouldn’t trust everything you read or see on television, you should never blindly trust information just because it is on a map." Replace ''map'' with ''statistical study'' and the same advice applies. | |||

Muehlenhaus goes on to give the following questions to consider when evaluating a map: | |||

#Who made the map? | |||

#What is the purpose of the map? That is, what is the map attempting to communicate? | |||

#Who is the intended audience? (It is important to remember that the map may not have been designed for you, but a more specialized audience.) | |||

#Does the map effectively achieve its communication goals? Does it present an interesting story or argument? | |||

As an example of a good map, the article gives an extended discussion of a NASA map of last fall's Yosemite Rim Fire in California. | |||

Submitted by Bill Peterson | |||

==Warren Buffett's billion dollar gamble== | |||

In a [http://www.quickenloans.com/blog/quicken-loans-billion-dollar-bracket-challenge widely publicized announcement] on January 21, 2014, Quicken Loans offered a billion (sic) dollar prize to any contestant who can fill out the bracket perfectly in the March NCAA basketball tournament. Because they don't have a spare billion in the bank, they have insured against the possibility of a winner with Berkshire Hathaway (BH), paying an undisclosed premium believed to be around 10 million dollars. Is this a good deal for BH? | |||

Some relevant data: to win you must predict all 63 game winners correctly. The number of entries is limited to 10 million. The prize is actually 500 million cash (or 1 billion over 40 years). A premium of about 10 million against a 500 million payout breaks even when the chance of a winner is 10/500 = 1/50, so presumably Warren Buffett asked his actuary, "Are you very confident that the chance of someone winning is considerably less than 1/50?" How would you have answered? | |||

I put this forward as an interesting topic for open-ended classroom discussion. First emphasize that the naive model (each entry has chance 1 in 2<sup>63</sup> to win) is ridiculous. Then elicit the notions that a better model might involve some combination of | |||

*modeling typical probabilities for individual games | |||

*modeling the strategies used by contestants | |||

*empirical data from similar past forecasting tournaments. | |||

Here are two of many possible lines of thought. | |||

(1) The arithmetic | |||

<center> | |||

(5 million) × (3/4)<sup>63</sup> ≈ 1/14 | |||

</center> | |||

suggests that if half the contestants are able to consistently predict game winners with chance 3/4, then it's a bad deal for BH. Fortunately for BH, this scenario seems inconsistent with past data: the same calculation, applied to entries in a similar (but only 1 million dollar prize) ESPN contest last year, says that about | |||

<center> | |||

(4 million) × (3/4)<sup>32</sup> ≈ 400 | |||

</center> | |||

entries should have predicted all 32 first-round games correctly. But none did (5 people got 30 out of 32 correct). | |||

(2) The optimal strategy, as intuition suggests, is to predict the winner of each game to be the team you think (from personal opinion or external authority) more likely to win. For various reasons, not every contestant does this. For instance, as an aspect of a general phenomenon psychologists call [http://en.wikipedia.org/wiki/Probability_matching probability matching], a contestant might think that because some proportion of games are won by the underdog, they should bet on the underdog that proportion of times. And there are other reasons (supporting a particular team; personal opinions about the abilities of a subset of teams) why a contestant might predict the higher ranked team in most, but not all, games. So let us imagine, as a purely hypothetical scenario, that each contestant predicts the higher-ranked team to win in all except ''k'' randomly-picked games. Then the chance that someone wins the prize is about | |||

<center> | |||

Pr(in fact exactly ''k'' games won by underdog) × [(10 million) / <math>\tbinom{63}{k} </math> ] | |||

</center> | |||

provided the bracketed term is ≪1. This term is ≈ 0.15 for ''k'' = 6 and ≈ 0.02 for ''k'' = 7. The first term cannot be guessed -- as a student project one could get data from past tournaments to estimate it -- but is surely quite small for ''k'' = 6 or 7. This suggests a worst-case hypothetical scenario from BH's viewpoint: that an unusually small number of games are won by the underdog, and that a large proportion of contestants forecast that most games are won by the higher-ranked team. But even in this worst case it seems difficult to imagine the chance of a winner becoming anywhere close to 1/50. | |||

'''Other estimates''' | |||

A brief search for other estimates of the chance that an individual skilled forecaster could win the prize finds | |||

*Jeff Bergen of DePaul University asserts [http://www.youtube.com/watch?v=O6Smkv11Mj4 in this YouTube video] a 1 in 128 billion chance. | |||

*Ezra Miller of Duke University [http://www.latimes.com/business/la-fi-buffett-basketball-bet-20140122,0,7653962.story#axzz2r88PpgCI is quoted as saying] a 1 in 1 billion chance. | |||

Neither source explains how these chances were calculated. | |||

Submitted by David Aldous (reproduced from a blog post [http://www.stat.berkeley.edu/~aldous/Blog/warren_buffett.html here]). | |||

==Confusing dietary advice== | |||

[http://www.nytimes.com/2014/02/09/opinion/sunday/why-nutrition-is-so-confusing.html?hp&rref=opinion Why nutrition is so confusing]<br> | |||

by Gary Taubes, ''New York Times'', 8 February 2014 | |||

Taubes reports that in 1960, fewer than 13 percent of Americans were obese and only 1 percent were diagnosed as diabetic. At the time, there were fewer than 1100 articles on obesity or diabetes in the indexed medical literature. In the years since then, the obesity percentage has increased by a factor of 3, the diabetes percentage by a factor of 7. And more than 600,000 research articles on these topics have now appeared in the literature. | |||

For a satirical "association is not causation" example, one might suggest that publishing articles on obesity is a public health menace, since it leads to more weight problems in the population. Actually, Taubes's essay takes a more serious stab at the problem. Because heart disease and diabetes unfold on such long time-scales, controlled experiments can be prohibitively expensive if not impossible. Instead, we have observational studies, which can only suggest how various dietary factors might affect health outcomes. | |||

Taubes writes that: | |||

<blockquote> | |||

The associations that emerge from these studies used to be known as “hypothesis-generating data,” based on the fact that an association tells us only that two things changed together in time, not that one caused the other. So associations generate hypotheses of causality that then have to be tested. But this hypothesis-generating caveat has been dropped over the years as researchers studying nutrition have decided that this is the best they can do. | |||

</blockquote> | |||

Thus, | |||

<blockquote> | |||

...we have a field of sort-of-science in which hypotheses are treated as facts because they’re too hard or expensive to test, and there are so many hypotheses that what journalists like to call “leading authorities” disagree with one another daily. | |||

</blockquote> | |||

He cites Karl Popper: “The method of science as the method of bold conjectures and ingenious and severe attempts to refute them.” In this formulation, Taubes concludes that research on nutrition is out of balance. It is good at generating conjectures, but in need of more vigorous efforts at refuting them. | |||

Submitted by Bill Peterson | |||

Latest revision as of 01:10, 16 April 2014

December 21, 2013 to February 20, 2014

Quotations

I beseech you, in the bowels of Christ, think it possible that you may be mistaken. --Oliver Cromwell

Cromwell's rule, named by statistician Dennis Lindley, states that the use of prior probabilities of 0 or 1 should be avoided, except when applied to statements that are logically true or false. For instance, Lindley would allow us to say that Pr(2+2 = 4) = 1, where Pr represents the probability. In other words, arithmetically, the number 2 added to the number 2 will certainly equal 4.

Submitted by Paul Alper

"[Choosing] what to do in the face of uncertainty is never a scientific question. The science is elucidating the possibilities and the probabilities but how to act and how to react is your own values. And we always have to make that explicit. But if you don’t have any information, it’s hard to know how to apply your values in more than a random way."

Dot Earth blog, New York Times, 20 December, 2013

Submitted by Bill Peterson

"When you find common errors in the scientific literature – such as a simple misinterpretation of p values – hit the perpetrator over the head with your statistics textbook. It’s therapeutic."

Submitted by Paul Alper

“Uncertainty is an uncomfortable position. But certainty is an absurd one."

"I call myself a Possibilian: I'm open to...ideas that we don't have any way of testing right now."

"Possibilism (philosophy), the metaphysical belief that possible things exist"

Submitted by Margaret Cibes

"The more you are cited the better you are. It is self-evident. QED. But is it? Some academic papers are cited to be debunked."

Submitted by Margaret Cibes

"There is a story about two friends, who were classmates in high school, talking about their jobs. One of them became a statistician and was working on population trends. He showed a reprint to his former classmate. The reprint started, as usual, with the Gaussian distribution and the statistician explained to his former classmate the meaning of the symbols for the actual population, for the average population, and so on. His classmate was a bit incredulous and was not quite sure whether the statistician was pulling his leg. "How can you know that?" was his query. "And what is this symbol here?" "Oh," said the statistician, "this is pi." "What is that?" "The ratio of the circumference of the circle to its diameter." "Well, now you are pushing your joke too far," said the classmate, "surely the population has nothing to do with the circumference of the circle."

Communications in Pure and Applied Mathematics, February 1960

Submitted by Margaret Cibes

Forsooth

“The difference in mortality rates [in deaths per 100,000 women] between black women [top curve] and white women [bottom curve] with breast cancer has widened since 1975, in part because black women have not benefited as much from improvements in screening and treatment. Among the states with available data, Tennessee has the largest gap—**with nearly 14 black women dying for every one white woman**.” [emphasis added]

The phrase in bold appeared in the printed National Edition (p. A22) but was removed without comment from the online version by Dec. 24. None of the 162 online comments on the accompanying article questioned the phrase.

Submitted by Paul Campbell

How cold was it?

"In Minnesota the temperature was predicted to reach -31F (-35C) but meteorologists warned that accompanying wind chill could make it feel twice as cold…"

"Environment Canada is predicting the vast majority of the country will see below-normal temperatures — and snowfall — through March. In Thunder Bay and the Northwest, the probability of this is seen as between 50 and 60 per cent. Which means the weather office could be only half-right."

Submitted by Bill Peterson

The New York Times has printed two alternative covers of the Sunday Times magazine, for random distribution to subscribers around the country. That explains a headline that appeared in the week preceding the Super Bowl: "This Week's Cover Accurately Predicts the Super Bowl Winner (Half of the Time)"

Submitted by James Greenwood

"Oh, aren't people stupid! Do you know the average IQ is only 100? That's terribly low, isn't it? One hundred. It's no wonder the world's in such a mess."

The New Yorker, September 24, 2007, p. 103

Submitted by Margaret Cibes

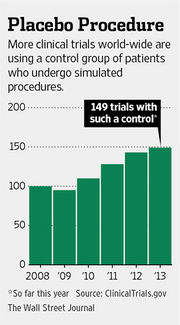

Sham knee surgery study

Common knee surgery does very little for some, study suggests

by Pam Belluck, New York Times, 25 December 2013

The article concerns an arthroscopic procedure to repair a torn meniscus--the cartilage in the knee. According to the article, about 700,000 such surgeries are performed annually, the most for any orthopedic procedure. But is the procedure actually effective? That is the question considered by a study conducted in Finland involving 146 patients at five hospitals. All of the subjects underwent arthroscopic procedures which involved making an incision and inserting a scope to assess the injury. However, at this point, it was randomly determined whether the meniscus would actually be trimmed or if a false blade would be rubbed on the knee cap as a sham treatment. The subjects did not know which treatment they got. In follow-up interviews a year later, comparable proportions of each reported that the procedure had improved their condition, and said that they would have it again, even though it might be a sham treatment.

The following quotation comes from a related story in the Wall Street Journal:

- "Doctors have a bad tendency to confuse what they believe with what they know," said Dr. Järvinen [one of the study's authors], an orthopedic resident and adjunct professor at Helsinki University Central Hospital.

The WSJ article also included the following graphic to illustrate the increasing use of sham surgery as a control.

Submitted by Paul Alper

Discussion

1. We read in the WSJ, "Similar to the practice of giving some patients sugar pills or placebos in drug studies, simulated surgery is among the most rigorous ways to evaluate the effectiveness of surgical procedures." What particular concerns might you have with sham surgical treatments as opposed to less invasive placebo treatments like sugar pills? For more on this, see Sham procedures and the ethics of clinical trials (Journal of the Royal Society of Medicine, December 2004).

2. The WSJ graphic cites ClinicalTrials.gov, which maintains "a registry and results database of publicly and privately supported clinical studies of human participants conducted around the world." On that web site we find the following data on trends over time

| Year | Total Number of Registered Studies | Total Number of Registered Studies with Posted Results |

|---|---|---|

| 2008 | 66,309 | |

| 2009 | 83,481 | 1,822 |

| 2010 | 101,210 | 3,529 |

| 2011 | 119,422 | 5,941 |

| 2012 | 138,960 | 8,768 |

| 2013 | 158,772 | 11,110 |

How do these figures affect your impression of the WSJ graph?

Submitted by Bill Peterson

The Distributome Project

The Distributome Project is a collection of web-based resources for teachers and students of probability, statistics, and partner disciplines.

The heart of Distributome is a digital library of special, parametric probability distributions. Properties of distributions, and relations between distributions, are stored in an XML database. Properties of a distribution include density, distribution, quantile, and generating functions; various moments, such as mean, variance, skewness, kurtosis, and entropy; median and other special quantiles; and so forth. Relations between distributions are classified in various standard ways, such as special cases, transformations on underlying random variables, limits of random variables or parameters, and conditioning.

Built from the database are a number of innovative features and ancillary resources:

- The Navigator is a dynamic, graphical interface in which the distributions are represented as nodes and the relations as edges between nodes. Clicking on a node shows the properties of the distribution, while clicking on an edge shows the relationship between the corresponding distributions.

- Interactive simulators and calculators are available for most common distributions. A simulator illustrates a distribution in a visual and dynamic way, often with the help of randomizing devices (such as coins or balls) or an underlying random process. A calculator can be used to compute values of the distribution function or quantile function.

- The Distributome Blog is a collection of activities that use probability distributions to model and solve problems in a variety of applied areas, often with real and historically interesting data sets. Each activity includes an overview, goals and problems, and hints and solutions. For example, the Homicide Trends Activity explores fitting a Poisson distribution to homicide data, based on a news story from the Columbus Dispatch.

- The Distributome Game challenges students to identify the correct probability distribution for a given problem statement. The game is timed, and is in the form of an array, with problem statements in the rows and distributions in the columns. Various settings are available to customize the game.

The Distributome project is freely available, open-source, and is built on standard web technologies. It is supported through grants from the National Science Foundation (grants 1023115, 1022560, and 1022636).

Submitted by Kyle Siegrist

Some CT averages

"Connecticut's Accountability System: Frequently Asked Questions", 2013

CT's 2013 assessment documents describe the calculations behind some of its figures, calculations that might provoke some interesting class discussions. (Email CibesM@comcast.net for a more detailed account, with references.)

Our Mastery Tests are administered to all public school students in grades 3-8 in three subjects (Mathematics, Reading, Writing) and to students in grades 5 and 8 in one additional subject (Science). For the sake of simplicity, and contrary to fact, assume that all grade 3-8 students take the same standard test forms, and that they do not have any "invalid" or "excluded" test scores. Note that "averaged" refers to the arithmetic mean, and the "GPA" label is mine, not the State Department of Education's.

Individual student test score. Based on a student's individual test score in a single subject, the student's performance is assigned to one of five qualitative categories – Advanced, Goal, Proficient, Basic, or Below Basic. Each category is then translated into one of four index scores – Advanced/Goal (=100), Proficient (=67), Basic (=33), and Below Basic (=0). Note that two performance categories are combined into one index score, and Goal throughout is 88.

Individual student "GPA." A student's index scores in the three/four required subjects are averaged to create a Student IPI (Individual Performance Index).

School "GPA." All of a school's Student IPIs are averaged over all subjects and all grades to create an SPI (School Performance Index). Note that this average of averages aggregates the IPIs of students at different grade levels, not all of whom took Science tests. Also, the SPI is modified by wrapping it into a school classification system – Excelling, Progressing, Transitioning, Review, and Turnaround – depending upon the SPI plus other factors that are said to "apply to schools differently" and to apply to "schools without tested grades."

District "GPA." All of a district's Student IPIs are averaged over all subjects in all six grades to create a DPI (District Performance Index).
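The chain of averages described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two students and their category results are invented, the names `INDEX_SCORE`, `student_ipi`, and `pooled` are hypothetical, and the pooled average at the end is not part of the State's procedure. It is included only to show how an average of averages can differ from an average over all tests when students take different numbers of tests.

```python
from statistics import fmean

# Index scores as described in the text: Advanced and Goal share one value.
INDEX_SCORE = {
    "Advanced": 100,
    "Goal": 100,
    "Proficient": 67,
    "Basic": 33,
    "Below Basic": 0,
}

def student_ipi(categories):
    """Average a student's index scores over the subjects tested."""
    return fmean([INDEX_SCORE[c] for c in categories])

# Two hypothetical students (invented data, not from the CT documents).
students = {
    # Grade 5: Mathematics, Reading, Writing, Science
    "grade 5 student": ["Advanced", "Goal", "Proficient", "Basic"],
    # Grade 4: Mathematics, Reading, Writing (no Science test)
    "grade 4 student": ["Proficient", "Proficient", "Proficient"],
}

ipis = {name: student_ipi(cats) for name, cats in students.items()}

# School-level figure as described in the text: the mean of the Student IPIs.
spi = fmean(list(ipis.values()))

# For comparison only: the mean over every individual test result.
pooled = fmean([INDEX_SCORE[c] for cats in students.values() for c in cats])

print(ipis)              # per-student averages
print(spi)               # average of averages
print(round(pooled, 2))  # average over all seven tests
```

With these invented students the two school-level figures do not agree, because the student with four tests carries more weight in the pooled average than in the average of IPIs.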

Discussion

A stated goal of CT's assessment process is to identify areas of strength/weakness in CT's students/schools/districts, for the ultimate purpose, one would imagine, of improving teaching/learning in subject areas.

1. Which, if any, of these figures might contribute to that goal in a way that would help improve the performance of any of these groups in any subject area?

2. Other than that goal, what purpose, if any, might these figures serve with respect to local municipalities or their economies?

3. Re the individual student test score:

(a) Might there be anything questionable about the index-scoring procedure or the figures built on it, which are reported to the nearest tenth?

(b) How many ways are there for a student who took four subject tests to reach the Goal of 88?

4. Re the SPI/DPI:

(a) Do you think it's reasonable to compare averages of IPIs, when the IPIs are based on different numbers of tests?

(b) Do you think it's sufficient to provide a single number, such as a mean, to describe the school/district data, or would you want to see some indication of variation, such as variance or even range?

(c) Re the SPI/DPI, why might a school focus on improving the scores of its highest performing students in order to raise these figures, possibly to the detriment of efforts directed toward the academically weaker students? If the "average" had been a "median," would that strategy have been as effective?

Submitted by Margaret Cibes

Dennis Lindley

Leading British statistician, Dennis Lindley, dies

StatsLife.org, 16 December 2013

Dennis Lindley was a leading proponent of Bayesian methods in statistics. The memorial tribute in StatsLife includes a link to a 32-minute video of an interview with Lindley.

Section 6.8 of Lindley's book Understanding Uncertainty (Wiley, 2005), is devoted to a discussion of Cromwell's Rule, which was featured in our Quotations above. From Lindley's description:

Bayes rule in its original, probability, form says that

- p(F | E) = p(E | F) p(F) / p(E)

providing p(E) is not zero. ...Suppose that your probability for F were zero, then since multiplication of zero by any number always gives the same result, zero, the right-hand, and hence also the left-hand, sides will always be zero whatever be the evidence E. In other words, if you have probability zero for something, F, you will always have probability zero for it, whatever evidence E you receive. Since, if an event has probability zero, the complementary event always has probability one, then, whatever evidence you receive, you will continue to believe in it. No evidence can possibly shake your strongly held belief.

… As an example of such a result, consider the case of a person who holds a view F with probability 1. Then coherence says that it is no use having a debate with them because nothing will change their mind [pp. 90-91].
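Lindley's point can be checked numerically. The sketch below is an illustration only: the function names and the likelihood values 0.9 and 0.1 are assumptions chosen for the example, and p(E) is expanded by the law of total probability as p(E | F) p(F) + p(E | not F) p(not F).

```python
def bayes_update(prior, p_e_given_f, p_e_given_not_f):
    """Return p(F | E) given p(F), p(E | F) and p(E | not F)."""
    p_e = p_e_given_f * prior + p_e_given_not_f * (1.0 - prior)
    if p_e == 0:
        raise ValueError("p(E) must not be zero")
    return p_e_given_f * prior / p_e

def update_repeatedly(prior, p_e_given_f, p_e_given_not_f, times):
    """Apply the same piece of evidence `times` times in a row."""
    p = prior
    for _ in range(times):
        p = bayes_update(p, p_e_given_f, p_e_given_not_f)
    return p

# Ten pieces of evidence, each nine times more likely under F than under not-F.
for prior in (0.0, 1e-6):
    print(prior, "->", update_repeatedly(prior, 0.9, 0.1, times=10))

# Ten pieces of evidence against F, starting from certainty that F is true.
print(1.0, "->", update_repeatedly(1.0, 0.1, 0.9, times=10))
```

A prior of exactly 0 or 1 never moves, whereas even a one-in-a-million prior is pushed close to 1 by the same evidence.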

Submitted by Paul Alper

Strong birthday problem

“The strong birthday problem”, by Mario Cortina Borja, Significance, December 2013

This article is a review of the “‘strong birthday’ problem as seemingly first defined by Anirban DasGupta in 2005”:

It refers to the probability that not just one person but everybody in a group of n individuals has a birthday shared by someone else in the group. Nobody in the group has a lone birthday.

The author’s conclusion:

The answer … is 3064. This is the number of people that need to be gathered together before there is a 50% chance that everyone in the gathering shares his birthday with at least one other.
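The figure of 3064 can be sanity-checked with a short Python sketch. Two caveats: this is not the method used in the article, and both functions rest on assumptions introduced here (365 equally likely birthdays, and a Poisson-style heuristic that treats the number of lone birthdays as approximately Poisson). The names `strong_birthday_approx` and `strong_birthday_simulated` are hypothetical.

```python
import math
import random
from collections import Counter

DAYS = 365  # assumes equally likely birthdays and no leap day

def strong_birthday_approx(n, days=DAYS):
    """Heuristic: P(no lone birthday) is about exp(-expected number of lone birthdays)."""
    # A given person is alone on their birthday if the other n - 1 people avoid it.
    expected_lone = n * (1.0 - 1.0 / days) ** (n - 1)
    return math.exp(-expected_lone)

def strong_birthday_simulated(n, trials, seed=0, days=DAYS):
    """Monte Carlo estimate of P(everyone shares a birthday with someone else)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        counts = Counter(rng.choices(range(days), k=n))
        # Only occupied days appear in `counts`; none may hold exactly one person.
        if min(counts.values()) >= 2:
            hits += 1
    return hits / trials

print(strong_birthday_approx(3064))
print(strong_birthday_simulated(3064, trials=300))
```

Both numbers should land near one half, which is consistent with the author's conclusion; the simulation is noisy at this number of trials, so only rough agreement should be expected.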

This issue of Significance also contains a foldout “Timeline of Statistics” and an interview with Nate Silver.

Submitted by Margaret Cibes

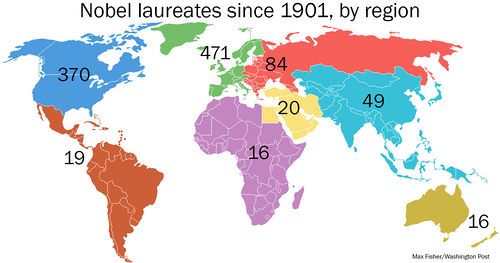

Maps that explain the world

40 more maps that explain the world

by Max Fisher, Washington Post, WorldViews blog, 13 January 2014

This post gives 40 remarkable data maps. They are a sequel to Fisher's original 40 maps (posted August 12, 2013) here. Each map is accompanied by some discussion, and links to the original data.

Here is one example. Map #14 in the newer set is entitled "Who wins Nobel prizes (and who doesn't)." It shows the geographical distribution of Nobel Prize winners since 1901.

Discussion

Fisher writes, "Maps can be a remarkably powerful tool for understanding the world and how it works, but they show only what you ask them to." What does the Nobel map show? What other questions occur to you that it does not answer?

Fisher has further discussion of these data in The amazing history of the Nobel Prize, told in maps and charts (15 October 2013).

Submitted by Paul Alper

How to read a map

On how to read a (good) map

by Dr. Ian Muehlenhaus, Directions Magazine, 27 January 2014

Directions is an industry magazine covering trends in "geospatial information technology." Dr. Muehlenhaus, who contributed this article, teaches geography and cartography at the University of Wisconsin-La Crosse. He writes, "Just as you shouldn’t trust everything you read or see on television, you should never blindly trust information just because it is on a map." Replace map with statistical study and the same advice applies.

Muehlenhaus goes on to give the following questions to consider when evaluating a map:

- Who made the map?

- What is the purpose of the map? That is, what is the map attempting to communicate?

- Who is the intended audience? (It is important to remember that the map may not have been designed for you, but a more specialized audience.)

- Does the map effectively achieve its communication goals? Does it present an interesting story or argument?

As an example of a good map, the article gives an extended discussion of a NASA map of last fall's Yosemite Rim Fire in California.

Submitted by Bill Peterson

Warren Buffett's billion dollar gamble

In a widely publicized announcement on January 21, 2014, Quicken Loans offered a billion (sic) dollar prize to any contestant who can fill out the bracket perfectly in the March NCAA basketball tournament. Because they don't have a spare billion in the bank, they have insured against the possibility of a winner with Berkshire Hathaway (BH), paying an undisclosed premium believed to be around 10 million dollars. Is this a good deal for BH? Some relevant data: to win you must predict all 63 game winners correctly. The number of entries is limited to 10 million. The prize is actually 500 million cash (or 1 billion over 40 years). A premium of about 10 million against a 500 million payout breaks even when the chance of a winner is 10/500 = 1/50, so presumably Warren Buffett asked his actuary, "Are you very confident that the chance of someone winning is considerably less than 1/50?" How would you have answered?

I put this forward as an interesting topic for open-ended classroom discussion. First emphasize that the naive model (each entry has chance 1 in 2<sup>63</sup> to win) is ridiculous. Then elicit the notions that a better model might involve some combination of

- modeling typical probabilities for individual games

- modeling the strategies used by contestants

- empirical data from similar past forecasting tournaments.

Here are two of many possible lines of thought. (1) The arithmetic

(5 million) × (3/4)<sup>63</sup> ≈ 1/14

suggests that if half the contestants are able to consistently predict game winners with chance 3/4, then it's a bad deal for BH. Fortunately for BH this scenario seems inconsistent with past data, because the same calculation, applied to entries in a similar (but only 1 million dollar prize) ESPN contest last year, says that about

(4 million) × (3/4)<sup>32</sup> ≈ 400

entries should have predicted all 32 first-round games correctly. But none did (5 people got 30 out of 32 correct).
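For classroom use, the products in line of thought (1) can be recomputed directly. The Python sketch below multiplies the number of entries by the per-game success chance raised to the number of games. It assumes, as the text does, that every game is predicted independently with the same chance. The function name is our own.

```python
def expected_perfect_entries(n_entries, p_correct, n_games):
    """Expected number of entries that get every game right,
    assuming independent games and a common success chance."""
    return n_entries * p_correct ** n_games


# Naive coin-flip model: all 10 million entries, chance 1/2 per game.
naive = expected_perfect_entries(10_000_000, 0.5, 63)

# Hypothetical skilled half of the field in the Quicken Loans contest.
bh_case = expected_perfect_entries(5_000_000, 0.75, 63)

# Same skill level applied to the first round of last year's ESPN contest.
espn_case = expected_perfect_entries(4_000_000, 0.75, 32)

print(f"naive model:       {naive:.3g}")
print(f"BH, 63 games:      {bh_case:.3g}")
print(f"ESPN, first round: {espn_case:.3g}")
```

Comparing the ESPN figure with the observed count of zero perfect first rounds is what makes the 3/4 scenario look implausible.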

(2) The optimal strategy, as intuition suggests, is to predict the winner of each game to be the team you think (from personal opinion or external authority) more likely to win. For various reasons, not every contestant does this. For instance, as an aspect of a general phenomenon psychologists call probability matching, a contestant might think that because some proportion of games are won by the underdog, they should bet on the underdog that proportion of times. And there are other reasons (supporting a particular team; personal opinions about the abilities of a subset of teams) why a contestant might predict the higher ranked team in most, but not all, games. So let us imagine, as a purely hypothetical scenario, that each contestant predicts the higher-ranked team to win in all except k randomly-picked games. Then the chance that someone wins the prize is about

Pr(in fact exactly k games won by underdog) × [(10 million) / <math>\tbinom{63}{k} </math> ]

provided the bracketed term is ≪1. This term is ≈ 0.15 for k = 6 and ≈ 0.02 for k = 7. The first term cannot be guessed -- as a student project one could get data from past tournaments to estimate it -- but is surely quite small for k = 6 or 7. This suggests a worst-case hypothetical scenario from BH's viewpoint: that an unusually small number of games are won by the underdog, and that a large proportion of contestants forecast that most games are won by the higher-ranked team. But even in this worst case it seems difficult to imagine the chance of a winner becoming anywhere close to 1/50.
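The bracketed term in line of thought (2) is easy to tabulate. The Python sketch below computes (10 million) divided by the binomial coefficient for a range of k. It covers only the second factor: the first factor, the chance that exactly k games are won by the underdog, would have to be estimated from past tournament data and is not modeled here. The function name is illustrative.

```python
from math import comb


def bracketed_term(k, n_entries=10_000_000, n_games=63):
    """Entries divided by the number of ways to choose which k games
    are predicted as upsets. A usable approximation to the chance
    that some entry matches only when the value is well below 1."""
    return n_entries / comb(n_games, k)


for k in range(4, 9):
    print(k, round(bracketed_term(k), 3))
```

For k of 5 or fewer the ratio exceeds 1, so the approximation no longer applies; in that range there are enough entries to cover every possible choice of k upsets on average.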

Other estimates

A brief search for other estimates of the chance that an individual skilled forecaster could win the prize finds

- Jeff Bergen of DePaul University asserts in this YouTube video a 1 in 128 billion chance.

- Ezra Miller of Duke University is quoted as giving a 1 in 1 billion chance.

Neither source explains how these chances were calculated.

Submitted by David Aldous (reproduced from a blog post here).

Confusing dietary advice

Why nutrition is so confusing

by Gary Taubes, New York Times, 8 February 2014

Taubes reports that in 1960, fewer than 13 percent of Americans were obese and only 1 percent were diagnosed as diabetic. At the time, there were fewer than 1100 articles on obesity or diabetes in the indexed medical literature. In the years since then, the obesity percentage has increased by a factor of 3, the diabetes percentage by a factor of 7. And more than 600,000 research articles on these topics have now appeared in the literature.

For a satirical "association is not causation" example, one might suggest that publishing articles on obesity is a public health menace, since it leads to more weight problems in the population. Actually, Taubes's essay takes a more serious stab at the problem. Because heart disease and diabetes unfold on such long time-scales, controlled experiments can be prohibitively expensive if not impossible. Instead, we have observational studies, which can only suggest how various dietary factors might affect health outcomes.

Taubes writes that:

The associations that emerge from these studies used to be known as “hypothesis-generating data,” based on the fact that an association tells us only that two things changed together in time, not that one caused the other. So associations generate hypotheses of causality that then have to be tested. But this hypothesis-generating caveat has been dropped over the years as researchers studying nutrition have decided that this is the best they can do.

Thus,

...we have a field of sort-of-science in which hypotheses are treated as facts because they’re too hard or expensive to test, and there are so many hypotheses that what journalists like to call “leading authorities” disagree with one another daily.

He cites Karl Popper: “The method of science as the method of bold conjectures and ingenious and severe attempts to refute them.” In this formulation, Taubes concludes that research on nutrition is out of balance. It is good at generating conjectures, but in need of more vigorous efforts at refuting them.

Submitted by Bill Peterson