Chance News 9: Difference between revisions


The size of this page is <wiki:PageSize /> bytes.

==Quotation==

<blockquote> Mathematics is not an opinion. <br>Anonymous.</blockquote>

==Forsooth==

Here are some Forsooth items from the October issue of RSS News.

<blockquote> But a good degree can make all the difference..to the prospect of actually being able to pay off that massive student debt-- the majority of students now graduate owing a crushing 13,501 pounds. </blockquote>

<div align="right">The Times (Body & Soul supplement: p10)<br>
23 April 2005</div>

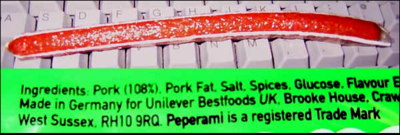

The RSS Forsooth also included a picture of a Peperami sausage label whose ingredient list began with Pork (108%).

The blogs had great fun with this Forsooth. [http://www.gamedev.net/community/forums/topic.asp?topic_id=305589 Benbryves], a member of [http://www.gamedev.net Game Dev.net], gave the following picture from the back of the Peperami sausage that he bought at a Sainsbury's supermarket:

<center>[[Image:peperami.png|400px|Ingredients of Peperami sausage.]]
</center>

Droid Young on the [http://forums.teamphoenixrising.net/archive/index.php/t-30669.html Team Phoenix Rising Forums website] said that he wrote to the company and got the following answer:

<blockquote>Dear Mr Young

Thank you for your enquiry.

Peperami is a salted and cured spicy meat snack.

The weight of the raw meat going into Peperami exceeds the weight of the end product because the recipe loses moisture, and therefore loses weight, during the fermentation, drying and smoking process.

Please contact us again if we can be of any further assistance.

Kind regards</blockquote>

DISCUSSION:

Does the company's answer seem reasonable to you?

==Supreme Court Nominee Alito: Statistics Misleading?==

An [http://www.salon.com/politics/war_room/2005/10/31/jury/index.html article] in Salon.com for October 31 discusses the case [http://caselaw.lp.findlaw.com/scripts/getcase.pl?court=3rd&navby=case&no=989009v3&exact=1 Riley v. Taylor]. The plaintiff Riley, convicted at trial of first-degree murder, was African-American; at the trial, the prosecution used its peremptory challenges to eliminate all three of the African-Americans on the jury panel. In the same county that year, there were three other first-degree murder trials, and in every one of those cases all of the African-American jurors were struck.

Riley appealed his conviction. A majority of the judges on the appeals court thought that there was evidence that jurors were struck for racial reasons. According to them, a simple calculation indicates that there should have been five African-American jurors amongst the forty-eight that were empanelled in the four cases. However, there were none. To these judges, this was clear evidence of racial motivation in the striking of such jurors.

Judge Alito dissented. He called the majority's analysis simplistic, and stated that although only 10% of the U.S. population is left-handed, five of the last six people elected president of the United States were left-handed. He asked rhetorically whether this indicated bias against right-handers amongst the U.S. electorate.

The majority responded that there is no provision in the Constitution that protects persons from discrimination based on whether they are right-handed or left-handed, and that to compare these cases with the handedness of presidents ignores the history of racial discrimination in the United States.

===Questions===

1) Assuming that Judge Alito is correct about the proportion of left-handers in the U.S. population, what is the probability that five of the last six presidents elected would be left-handed?

2) Is Judge Alito's comparison biased by the fact that he chose just the last six presidents? Presumably he believed that the president just before this group was right-handed, otherwise he would have included him in the sample and said "six of the last seven presidents." What is the relevant statistic?

3) When the decision came down in 2001, the last six people elected president were George W. Bush, Clinton, George H. W. Bush, Reagan, Carter and Nixon. Of these, Clinton and George H. W. Bush were left-handed. Ford, who was left-handed, was not elected president (or even vice-president); he became president upon the resignation of Richard Nixon. Reagan may have been left-handed as a child, but he wrote right-handed so his case isn't clear; the [http://www.reagan.utexas.edu/archives/reference/facts.html Reagan Presidential Library] says that he was "generally right-handed." Judge Alito may have been confused, including on his list Ford (who was not elected) and George W. Bush (who is not left-handed, although his father is), as well as Reagan. The other left-handed presidents were Truman and Garfield. Hoover is found on some lists of left-handed presidents, but according to the [http://home.comcast.net/~sharonday7/Presidents/AP060306.htm Hoover Institution], he was not left-handed. How does this information affect Alito's argument?

4) Suppose that 5/48 of the jury pool were African-American. What is the probability that no juror amongst the 48 selected would have been African-American? How does this compare with the actual statistics of left-handed presidents? Does the same objection apply to this case as might apply to Alito's example?

5) What is your opinion? Is there clear statistical evidence of racial bias in the use of peremptory challenges in this county?

6) What does it say about our judiciary that Judge Alito could get his facts as wrong as he did, and that none of the judges in the majority caught the errors?
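One way to approach the arithmetic behind questions 1, 3 and 4 is with the binomial distribution. The sketch below is only an illustration, not part of the court's or the contributor's analysis. It assumes that handedness and juror selection behave like independent trials with a fixed probability (10% for left-handedness, 5/48 for an African-American juror), which is itself a modelling choice open to discussion; the helper names are made up for this example.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def binom_tail(k: int, n: int, p: float) -> float:
    """Probability of at least k successes in n independent trials."""
    return sum(binom_pmf(i, n, p) for i in range(k, n + 1))


P_LEFT = 0.10  # Judge Alito's figure for the share of left-handers

# Question 1: five (or at least five) left-handers among six presidents.
p_exactly_5 = binom_pmf(5, 6, P_LEFT)
p_at_least_5 = binom_tail(5, 6, P_LEFT)

# Question 3: the count given there is two of six (Clinton, G. H. W. Bush).
p_at_least_2 = binom_tail(2, 6, P_LEFT)

# Question 4: no African-American among 48 jurors when 5/48 of the pool is.
# Assumption: jurors are drawn independently from a large pool.
p_no_black_jurors = binom_pmf(0, 48, 5 / 48)

print(f"P(exactly 5 of 6 left-handed)  = {p_exactly_5:.6f}")
print(f"P(at least 5 of 6 left-handed) = {p_at_least_5:.6f}")
print(f"P(at least 2 of 6 left-handed) = {p_at_least_2:.4f}")
print(f"P(0 of 48 jurors)              = {p_no_black_jurors:.4f}")
```

Comparing the last two printed values is one way into the second half of question 4; whether independence is a fair assumption in either setting is part of the discussion.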

Contributed by Bill Jefferys

==Who wants Airbags==

Who wants Airbags?<br>
Chance Magazine, Spring 2005<br>
Mary C. Meyer and Tremik Finney

This article is available from the [http://www.amstat.org/publications/chance/ Chance Magazine] web site under "Feature Articles". The data for this study are available [http://www.stat.uga.edu/~mmeyer/airbags.htm here].

Here is the authors' description of their study.

<blockquote> Airbags are known to save lives. Airbags are also known to kill people. It is widely believed that the balance is in favor of airbags. Government and private studies have shown a statistically significant beneficial effect of presence of airbags on the probability of surviving an accident. However, our study suggests the opposite: that airbags have been killing more people than they have been saving. <br><br>

The National Highway Traffic Safety Administration (NHTSA) keeps track of deaths due to airbags; you can find a list of deaths on the NHTSA web site, along with conditions under which these deaths occurred. Each death occurred in a low speed collision, and for each, there is no other possible cause of death. Is it reasonable to assume that airbags can kill people only at low speeds? Isn't it more likely that airbags also kill people at higher speeds, but the death may be attributed to the crash? In this study we compare fatality rates for occupants with airbags available to fatality rates for occupants without airbags, controlling for possible confounding factors such as seatbelt use, impact speed, and direction of impact. What effect does the presence of an airbag have on the probability of death in a crash, under various conditions? <br><br>

The main difference between our study and the previous studies is the choice of the dataset. We use the NASS CDS database, which is a stratified random sample of crashes nationwide. Previous studies showing beneficial effects of airbags have all used the FARS database, which contains data for all crashes in which a fatality occurred. We can limit our analyses to a subset of the NASS CDS database, choosing only crashes where there was at least one fatality; this should be a random sample of the FARS database. When we perform the analyses on this subsample, we can reproduce the results of previous studies: in accidents in which a fatality occurs, airbags are beneficial. However, for the entire random sample of crashes, they increase rather than decrease the probability of death. <br><br>

Here is an analogy to help understand this: If you look at people who have cancer, radiation treatment will improve their probability of survival. However, radiation treatment is dangerous and can actually cause cancer. Making everyone in the country have airbags and measuring effectiveness only in the fatality group, is like making everyone have radiation treatment and looking only at the cancer group to check efficacy. Within the cancer group, radiation will be found to be effective, but there will be more deaths on the whole. <br><br>

This is what seems to be happening with airbags. In a severe accident, airbags can save lives. However, they are inherently dangerous and pose a risk to the occupant. Our analyses show that in lower-speed crashes, the occupant is significantly more likely to die with an airbag than without. This effect is not seen in the analysis using FARS, because this database does not contain information about low-speed crashes without deaths. <br><br>

Our analysis shows that previous estimates of airbag effectiveness are flawed, because a limited database was used. We have demonstrated this by reproducing their results, using a subsample of the CDS data. The new estimates of effectiveness suggest that airbags are not the lifesaving devices we have believed them to be.</blockquote>

Of course one should ask what the National Highway Traffic Safety Administration thinks of this. The journal asked the agency to respond, and it did so. The response, by Charles Kahane, was that the FARS data should be used, since the CDS dataset that the authors used was too small to obtain significant results.

The authors of the Chance Magazine article replied that they were able to get significant results using the CDS data and that using the FARS data would be answering the wrong question. They write:

<blockquote>If a front-seat occupant wishes to ask the question, "If I get in an accident, am I less likely or more likely to die, if I have an airbag?" The proper way to answer this question is with the CDS dataset. With the FARS dataset, the question one can answer is, "If I get in a highway accident in which there is at least one fatality, am I less likely or more likely to die, if I have an airbag?"</blockquote>

The article itself does not include this exchange but you can read it [http://chance.dartmouth.edu/chancewiki/index.php/Image:Airbagresponse.pdf here].

This would be a great article to discuss in a statistics class. The authors clearly explain the methods they used and why they used them. Since they have made their data available, students could use the data for their own analysis.

Submitted by Laurie Snell

==Pick a number, any number==

[http://www.economist.com/World/europe/displayStory.cfm?story_id=5115147&tranMode=none Problems with measures of government performance and productivity], The Economist, Nov 3rd 2005.<br>
[http://www.statscom.org.uk/media_pdfs/reports/PSAreport%2BAnnexA.pdf PSA targets: the devil in the detail], (UK) Statistics Commission, October 2005 - you can [mailto:Allen.Ritchie@Statscom.org.uk comment on the report] up to the end of this month (Nov 2005).

A recent report from the Statistics Commission, an independent body that monitors UK official statistics, claims that many Government performance targets are seriously flawed. These Public Service Agreement (PSA) targets, set by and for government departments, are intended to measure the desired outcomes of Government policies, in particular spending policies.

A total of 102 separate PSA targets were set in the 2004 Spending Review. Some were criticised for being incomprehensibly complex, such as the 23 separate indicators for British research innovation performance, each with its own target and four milestones.

The report says some targets would be missed if a single case falls below a given threshold. For example, if even one school out of 3,400 fails to bring half its pupils up to scratch in each of English, maths and science, then that target would be missed. In other cases, the necessary data do not exist.
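To see why a target that fails on a single case is so fragile, consider a minimal sketch in which each of the 3,400 schools independently clears its threshold with the same probability. The independence assumption and the per-school success rates below are hypothetical, chosen only for illustration; the report gives no such figures.

```python
def prob_target_met(n_units: int, p_unit: float) -> float:
    """Chance that every one of n_units independent units clears its threshold."""
    return p_unit**n_units


N_SCHOOLS = 3400  # number of schools quoted in the report

# Hypothetical per-school success rates (not taken from the report).
for p_school in (0.99, 0.999, 0.9999):
    p_met = prob_target_met(N_SCHOOLS, p_school)
    print(f"per-school success rate {p_school}: target met with probability {p_met:.4f}")
```

Under these assumptions, even very reliable individual schools leave a sizeable chance that the overall target is missed.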

A frequently-voiced criticism of the targets associated with previous spending reviews was that many had been defined without sufficient consideration of the Government’s ability to measure performance against them. That is, attempts to quantify some aspirational targets lead to the creation of targets that seem artificial or forced.

It is however obvious that the availability, credibility and validity of data indicating progress against these targets is fundamental to trust in the integrity of the target-setting process. The Commission is therefore concerned to assess whether the statistical evidence to support PSAs is adequate. The question of whether the statistical underpinnings are adequate can only be answered ‘bottom up’ by reviewing each and every target.

In view of the importance of PSA targets, the Commission recommends that more consideration should be given to the adequacy of the statistical infrastructure to support their future evolution.

<blockquote>
What is needed is a robust cross-government planning system for official statistics that can pick up the future data requirements (to support the setting of targets) at the earliest possible stage and feed those effectively into the allocation of departmental resources.
</blockquote>

The Commission recommends that government departments should pay more attention to data quality issues. It quotes the National Audit Office, which says

<blockquote>
the allocation of clear responsibility for data quality and active management oversight of data systems would reinforce the importance of data quality.
</blockquote>

The Royal Statistical Society has produced a report which comments on [http://www.rss.org.uk/PDF/PerformanceMonitoring.pdf aspects of the PSA targets].

===Further reading===

[http://www.rss.org.uk/PDF/PerformanceMonitoring.pdf Performance Indicators: Good, Bad and Ugly], Royal Statistical Society - Working Party on Performance Monitoring in the Public Services, which stresses that performance monitoring when done badly can be very costly, not merely ineffective but harmful and indeed destructive.

Submitted by John Gavin.

==A roulette winner==

[http://www.guardian.co.uk/spain/article/0,2763,1245856,00.html Judge rules for Madrid gambler]<br>The Guardian, June 24, 2004<br>
Ben Sills in Granada

[http://www.theage.com.au/articles/2004/06/24/1087845018860.html Court backs gambler]<br>
The Age, Madrid, June 24, 2004

Starting in 1990, Gonzalo Garcia-Pelayo, with the help of friends and relatives, made a statistical study of the winning numbers in roulette at the Casino Gran Madrid. He found that some numbers came up as often as once in every 28 throws, apparently due to imperfections in the wheel, floors not being level, irregularly sized ball slots, etc.
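A short calculation suggests why a number that wins once in 28 throws matters. The sketch below assumes a single-zero wheel with 37 pockets and the usual 35-to-1 payout on a single-number bet; neither detail is stated in the articles, so both are assumptions made for illustration, as is the function name.

```python
def straight_up_ev(p_win: float, payout: int = 35) -> float:
    """Expected profit per unit staked on a single number.

    A win returns `payout` units of profit; a loss costs the one-unit stake.
    """
    return p_win * payout - (1 - p_win)


# Assumed fair single-zero wheel: each number wins once in 37 throws.
ev_fair = straight_up_ev(1 / 37)

# Biased number reported in the article: wins once in 28 throws.
ev_biased = straight_up_ev(1 / 28)

print(f"fair wheel:    {ev_fair:+.4f} per unit staked")
print(f"biased number: {ev_biased:+.4f} per unit staked")
```

Under these assumptions the sign of the expected profit flips from negative to positive, which is the whole point of tracking the wheel.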

The article reports that Gonzalo used his analysis to win more than a million euros during a two-year run. From another article we learn that Gonzalo was denied entry to the casino in 1992, but in 1994 the government overruled the casino. The casino then tried to get the courts to uphold its right to refuse Gonzalo entry. For ten years the case went through the courts, and in 2004 Spain's Supreme Court ruled against the casino.

According to The Age article:

<blockquote> The Supreme Court ruling said Garcia-Pelayo and company used "ingenuity and computer techniques. That's all."</blockquote>

The Guardian reports:

<blockquote>But despite this week's ruling he has no plans to return to Madrid's roulette tables. "I'm too well known," he said. Instead he plans to sue the casino for 1.2 million euros in lost earnings.</blockquote>

Gonzalo's story was the subject of a History Channel program and is available from their [http://store.aetv.com/html/subject/index.jhtml?id=cat830005 website] as a DVD.

This was part of the History Channel's "Breaking Vegas" series of 13 programs, which tell the stories of Ed Thorp, the MIT group, and others who have tried to beat the casinos. You can read a review of this series [http://www.pokertv.com/articles/paulkammen/history_channel_breaking_vegas.html here].

==Battle of the sexes==

[http://education.guardian.co.uk/higher/news/story/0,,1635507,00.html Who has the bigger brain?], The Observer, Sunday November 6, 2005.<br>
[http://news.bbc.co.uk/1/hi/education/4183166.stm 'Men cleverer than women' claim], BBC News on-line<br>
[http://www.dailymail.co.uk/pages/live/articles/news/news.html?in_article_id=360291&in_page_id=1770 The great intellectual divide], by Fiona McCray, Daily Mail, 25th August 2005.<br>
[http://education.guardian.co.uk/higher/research/story/0,9865,1556362,00.html Men 'grasping at straws' over intelligence claims], Donald MacLeod, The Guardian, August 25, 2005. <br>
[http://www.thes.co.uk/current_edition/story.aspx?story_id=2024132 IQ claim will fuel gender row], Phil Baty, The Times Higher Educational Supplement, 26 August 2005.

In August, Dr Paul Irwing and Professor Richard Lynn announced that men are significantly cleverer than women and that male university students outstrip females by almost five IQ points. Their research was based on IQ tests given to 80,000 people and a further study of 20,000 students.

The academics used a test which is said to measure "general cognitive ability" - spatial and verbal ability. As intelligence scores among the study group rose, they claim they found a widening gap between the sexes. For example, there were twice as many men with IQ scores of 125, a level said to correspond with people getting first-class degrees. At scores of 155, associated with genius, there were 5.5 men for every woman.
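A widening ratio in the upper tail is what one would expect from any modest difference in means between two bell-shaped distributions. The sketch below is not Irwing and Lynn's method. It simply assumes two normal distributions with a common standard deviation of 15 and means five points apart (102.5 and 97.5, values chosen here for illustration) and computes the ratio of the proportions scoring above a threshold.

```python
from math import erfc, sqrt

# Assumed parameters, chosen only for illustration (not from the study).
MEN_MEAN, WOMEN_MEAN, SD = 102.5, 97.5, 15.0


def upper_tail(x: float, mean: float, sd: float) -> float:
    """P(X > x) for a normal distribution with the given mean and sd."""
    return 0.5 * erfc((x - mean) / (sd * sqrt(2)))


def male_to_female_ratio(threshold: float) -> float:
    """Ratio of the male to the female proportion scoring above threshold."""
    return upper_tail(threshold, MEN_MEAN, SD) / upper_tail(threshold, WOMEN_MEAN, SD)


for iq in (100, 125, 155):
    print(f"IQ above {iq}: {male_to_female_ratio(iq):.2f} men per woman")
```

The ratio grows as the threshold rises even though the assumed mean difference is fixed. The figures from this toy model need not match those quoted in the press, which depend on the authors' actual data and on any difference in spread between the sexes.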

Their work is based on a technique known as meta-analysis. They examined dozens of previous studies of men's and women's IQs, research that had been carried out in different countries between 1964 and 2004 and published in a variety of different journals. Then they subjected these studies to an intense statistical analysis.

Dr Irwing told The Times

<blockquote>
The differences may go some way to explaining the greater numbers of men achieving distinctions of various kinds, such as chess grandmasters, Fields medallists for mathematics, Nobel prize-winners and the like.
</blockquote>

But just recently, the work of the two academics was attacked in unusually strong terms in the journal Nature, just as a paper officially outlining their work was published in the British Journal of Psychology. Tim Lincoln from Nature justified the attack by saying

<blockquote>
We were made aware that Irwing and Lynn's results were based on a seriously flawed methodology, and had the opportunity to provide timely expert opinion when their paper became publicly available.
</blockquote>

The author of the Nature paper, Dr Steve Blinkhorn, went further:

<blockquote>
Their study - which claims to show major sex differences in IQ - is simple, utter hogwash.
</blockquote>

Blinkhorn said the pair were ignoring a vast body of work that had found no differences. In particular, Blinkhorn said

<blockquote>
they chose to ignore a massive study, carried out in Mexico, which showed there was very little difference in the IQs of men and women. They say it is "an outlier" in data terms -- in other words, it was a statistical freak. It was nothing of the kind. It was just plain inconvenient. Had it been included, as it should have been, it would have removed a huge chunk of the differences they claim to have observed.
</blockquote>

He goes on to say

<blockquote>
Psychologists often carry out studies that find no differences between men's and women's IQs but don't publish them for the simple reason that finding nothing seems uninteresting. But you have to take these studies into account as well as those studies that do find differences. But Lynn and Irwing did not. That also skewed their results.
</blockquote>

Blinkhorn also accuses the pair of adopting a variety of statistical manoeuvres that he describes, in his paper, as being 'flawed and suspect'.

Last week Irwing defended the study, saying

<blockquote>
The study they had done also has to be seen in context of our other work which has shown significant sex differences in IQ. Nor is it true that we played about with our data.
</blockquote>

Blinkhorn is unrepentant and states in Nature:

<blockquote>
Sex differences in average IQ, if they exist at all, are too small to be interesting.
</blockquote>

The Guardian article finishes by highlighting other recent rows that have erupted over papers in leading journals.

<ul>
<li>
In 1998, Andrew Wakefield caused a furore when he wrote an article in the Lancet claiming a link between autism and the MMR vaccine. The paper led to a boycott of the vaccine by many parents in the UK, although scientists have been unable to establish any of his claims. Critics attacked the Lancet for publishing the paper.
<li>
The Lancet journal was also criticised by Nobel laureate Aaron Klug for printing a paper claiming the immune systems of rats were damaged after they were fed genetically modified potatoes. The claims have never been substantiated.
<li>
In contrast, last year's Nature paper, in which scientists revealed they had found remains of a race of tiny apemen, Homo floresiensis, has survived scrutiny despite claims that the fossils really belonged to deformed Homo sapiens. Research has since confirmed the original paper's results.
</ul>

Perhaps not too surprisingly, the more female-orientated Daily Mail highlights a competing survey. This time 1,500 women were surveyed by Perfectil, which makes health supplements. Highlighted results include:

<ul>
<li> Women are taking the upper hand in relationships and growing in confidence about their looks.
<li> Almost two-thirds say they would ask a man to marry them rather than wait for him to pop the question, and one in three say they are comfortable chatting men up.
<li> As for sexual attraction, women are quick to know what they want. Twelve per cent say they know within ten seconds of starting a conversation with a man whether or not they fancy him. The majority make up their minds within five minutes.
<li> It found that women are becoming less and less bothered by wrinkles. Two in five want to age gracefully.
<li> Asked why they thought men were becoming obsessed with their looks, 88 per cent of the women said it was because men know they cannot be complacent as their wives or girlfriends may lose interest in them.
</ul>

===Questions===

<ul>
<li> Does the fact that Perfectil makes health supplements influence your opinion of their survey's results? What additional information might you ask for to be sure their results are not biased?
</ul>

Submitted by John Gavin.

==Statistical Prediction Rules==

In "Epistemology and the Psychology of Human Judgment" (Oxford University Press, 2005), Michael Bishop and J.D. Trout argue that we need a new tent for various mathematical, computational, philosophical and psychological results, called "Ameliorative Psychology", whose task would be to help people in their everyday reasoning by teaching them some simple-to-use statistical prediction rules (SPRs) that are demonstrably better than relying upon non-statistical rules (e.g., personal and clinical intuition).

They argue that the identification and justification of statistical prediction rules is the proper task of epistemology and would make the discipline practically relevant to the overall goals of "Ameliorative Psychology". Bishop and Trout see SPRs as a strategic route by which the discipline of (applied) epistemology might become more practically relevant to improving the success of everyday reasoning.

===Marital Happiness SPR===

The Marital Happiness SPR is "a low-cost reasoning strategy for predicting marital happiness. Take the couple's rate of love making and subtract from it their rate of fighting. If the couple makes love more than they fight, then they will probably report being happy; if they fight more often than they make love, then they will probably report being unhappy." (p. 30)

The procedural version of this SPR might look like this, where #L is the couple's rate of love making and #F their rate of fighting:

 if ((#L - #F) >= 0)
     report Happy
 else
     report Unhappy
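The pseudocode above can be turned into a small runnable function. This is only a sketch: the function and parameter names are invented here, and the tie case (equal rates) is reported as happy simply because the pseudocode uses `>=`, a case the quoted rule does not address.

```python
def marital_happiness_spr(lovemaking_rate: float, fighting_rate: float) -> str:
    """Predict self-reported marital happiness from two rates.

    Both rates must be measured over the same period (e.g. times per month).
    Ties are reported as "Happy", following the >= in the pseudocode.
    """
    if lovemaking_rate - fighting_rate >= 0:
        return "Happy"
    return "Unhappy"


# Example: a couple that makes love 8 times a month and fights 3 times.
print(marital_happiness_spr(8, 3))
```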

There is apparently good empirical evidence for this rule, and one might therefore argue that teaching this rule to the general public would be a worthy goal of "Ameliorative Psychology": it would offer reliable and simple-to-use quantitative guidance when reasoning about the causes and correlates of marital happiness.

===SPRs are successful in the long run===

It is tempting to think that statistical prediction rules are useful only for the other girl or guy. We often feel that 1) our clinical judgement in a particular situation is very good or 2) the present case is different in some important respect that obviates the need for a statistical prediction rule to reason about this particular instance. Bishop and Trout caution against overconfidence in our ability to predict the future better than an SPR. Moreover, using an SPR only occasionally, when it is convenient, invalidates the assumptions behind its use.

===What Paul Meehl hath wrought upon us===

The authors frequently cite the work of [http://www.tc.umn.edu/~pemeehl/ Paul Meehl]. Paul Meehl, who died in 2003, was a clinical psychology methodologist who long advocated the use of statistical prediction rules in clinical reasoning instead of relying on "clinical intuition". His groundbreaking research in the 1950s showed that SPRs generally out-performed clinical psychologists in diagnostic decision making.

Meehl, P. 1954. Clinical versus Statistical Prediction: A Theoretical Analysis and a Review of the Evidence. Minneapolis: University of Minnesota Press.

Bishop and Trout's work can be viewed as generalizing Meehl's epistemic/methodological conclusions and recommendations beyond the domain of clinical psychology to the task of using SPRs to improve everyday reasoning more generally.

===Further properties of SPRs===

Applied epistemologists should not be concerned with helping to formulate and teach SPRs for solving trivial problems. Users should be able to readily use SPRs for solving significant everyday problems. Recommended SPRs should be fairly robust in the face of small differences in the nature of the problem. An SPR should be more reliable than other methods of prediction for that domain; if not, it should be more feasible to use than a potentially superior method of prediction. This cost/benefit approach to identifying, teaching, and using SPRs is called "Strategic Reliabilism" and is the foundational epistemological theory Bishop and Trout use to justify their recommendations.

Latest revision as of 16:02, 7 May 2008

The size of this page is <wiki:PageSize /> bytes.

Quotation

Mathematics is not an opinion.

Anonymous.

Forsooth

Here are some Forsooth items from the October issue of RSS News.

But a good degree can make all the difference..to the prospect of actually being able to pay off that massive student debt-- the majority of students now graduate owing a crushing 13,501 pounds.

23 April 2005

The RRS Forsooth also included picture of a Peperami sausage label that started their ingredients with Pork (108%).

The Blogs had great fun with this forsooth. Benbryves a member of Game Dev.net gave the following picture from the back of the Peperamii sausage that he bought a Sainsbury's supermarket

Droid Young on the Team Phoenix Rising Forums website said that he wrote to the company and got the following answer:

Dear Mr Young

Thank you for your enquiry.

Peperami is a salted and cured spicy meat snack.

The weight of the raw meat going into Peperami exceeds the weight of the end product because the recipe loses moisture, and therefore loses weight, during the fermentation, drying and smoking process.

Please contact us again if we can be of any further assistance.

Kind regards

DISCUSSION:

Does the company answer seem reasonable to you?

Supreme Court Nominee Alito: Statistics Misleading?

An article in Salon.com for October 31 discusses the case Riley v. Taylor. The plaintiff Riley, convicted at trial of first-degree murder, was African-American; at the trial, the prosecution used its peremptory challenges to eliminate all three of the African-Americans on the jury panel. In the same county that year, there were three other first-degree murder trials, and in every one of those cases all of the African-American jurors were struck.

Riley appealed his conviction. A majority of the judges on the appeals court thought that there was evidence that jurors were struck for racial reasons. According to them, a simple calculation indicates that there should have been five African-American jurors amongst the forty-eight that were empanelled in the four cases. However, there were none. To these judges, this was clear evidence of racial motivation in the striking of such jurors.

Judge Alito dissented. He called the majority's analysis simplistic, and stated that although only 10% of the U.S. population is left-handed, five of the last six people elected president of the United States were left-handed. He asked rhetorically whether this indicated bias against right-handers amongst the U.S. electorate.

The majority responded that there is no provision in the Constitution that protects persons from discrimination based on whether they are right-handed or left-handed, and that to compare these cases with the handedness of presidents ignores the history of racial discrimination in the United States.

Questions

1) Assuming that Judge Alito is correct about the proportion of left-handers in the U.S. population, what is the probability that five of the last six presidents elected would be left-handed?

2) Is Judge Alito's comparison biased by the fact that he chose just the last six presidents? Presumably he believed that the president just before this group was right-handed, otherwise he would have included him in the sample and said "six of the last seven presidents." What is the relevant statistic?

3) When the decision came down in 2001, the last six people elected president were George W. Bush, Clinton, George H. W. Bush, Reagan, Carter and Nixon. Of these, Clinton and George H. W. Bush were left-handed. Ford, who was left-handed, was not elected president (or even vice-president); he became president upon the resignation of Richard Nixon. Reagan may have been left-handed as a child, but he wrote right-handed so his case isn't clear; the Reagan Presidential Library says that he was "generally right-handed." Judge Alito may have been confused, including on his list Ford (who was not elected) and George W. Bush (who is not left-handed, although his father is), as well as Reagan. The other left-handed presidents were Truman and Garfield. Hoover is found on some lists of left-handed presidents, but according to the Hoover Institution, he was not left-handed. How does this information affect Alito's argument?

4) Suppose that 5/48 of the jury pool were African-American. What is the probability that no juror amongst the 48 selected would have been African-American? How does this compare with the actual statistics of left-handed presidents? Does the same objection apply to this case as might apply to Alito's example?

5) What is your opinion? Is there clear statistical evidence of racial bias in the use of peremptory challenges in this county?

6) What does it say about our judiciary that Judge Alito could get his facts as wrong as he did, and that none of the judges in the majority caught the errors?
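Questions 1, 3 and 4 all come down to binomial probabilities. The following is a minimal sketch, assuming independent trials with a fixed success probability (a simplification for both presidents and jurors); the function name `binom_pmf` and the variable names are chosen here for illustration only.

```python
from math import comb

def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Question 1: five of six presidents left-handed, assuming 10% left-handers.
p_exactly_five = binom_pmf(5, 6, 0.10)
p_at_least_five = p_exactly_five + binom_pmf(6, 6, 0.10)

# Question 3: the corrected count, at least two left-handers among six.
p_at_least_two = 1 - binom_pmf(0, 6, 0.10) - binom_pmf(1, 6, 0.10)

# Question 4: no African-American among 48 jurors when the share is 5/48.
p_no_juror = binom_pmf(0, 48, 5 / 48)

print(f"exactly 5 of 6:  {p_exactly_five:.6f}")
print(f"at least 5 of 6: {p_at_least_five:.6f}")
print(f"at least 2 of 6: {p_at_least_two:.4f}")
print(f"0 of 48 jurors:  {p_no_juror:.4f}")
```

Whether "exactly five" or "at least five" is the relevant event is part of what question 2 asks, so the sketch reports both.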

Contributed by Bill Jefferys

Who wants Airbags

Who wants Airbags?

Chance Magazine, Spring 2005

Mary C. Meyer and Tremik Finney

This article is available from the Chance Magazine web site under "Feature Articles". The data for this study are available at http://www.stat.uga.edu/~mmeyer/airbags.htm.

Here is the authors' description of their study.

Airbags are known to save lives. Airbags are also known to kill people. It is widely believed that the balance is in favor of airbags. Government and private studies have shown a statistically significant beneficial effect of presence of airbags on the probability of surviving an accident. However, our study suggests the opposite: that airbags have been killing more people than they have been saving.

The National Highway Traffic Safety Administration (NHTSA) keeps track of deaths due to airbags; you can find a list of deaths on the NHTSA web site, along with conditions under which these deaths occurred. Each death occurred in a low speed collision, and for each, there is no other possible cause of death. Is it reasonable to assume that airbags can kill people only at low speeds? Isn't it more likely that airbags also kill people at higher speeds, but the death may be attributed to the crash? In this study we compare fatality rates for occupants with airbags available to fatality rates for occupants without airbags, controlling for possible confounding factors such as seatbelt use, impact speed, and direction of impact. What effect does the presence of an airbag have on the probability of death in a crash, under various conditions?

The main difference between our study and the previous studies is the choice of the dataset. We use the NASS CDS database, which is a stratified random sample of crashes nationwide. Previous studies showing beneficial effects of airbags have all used the FARS database, which contains data for all crashes in which a fatality occurred. We can limit our analyses to a subset of the NASS CDS database, choosing only crashes where there was at least one fatality; this should be a random sample of the FARS database. When we perform the analyses on this subsample, we can reproduce the results of previous studies: in accidents in which a fatality occurs, airbags are beneficial. However, for the entire random sample of crashes, they increase rather than decrease the probability of death.

Here is an analogy to help understand this: If you look at people who have cancer, radiation treatment will improve their probability of survival. However, radiation treatment is dangerous and can actually cause cancer. Making everyone in the country have airbags and measuring effectiveness only in the fatality group, is like making everyone have radiation treatment and looking only at the cancer group to check efficacy. Within the cancer group, radiation will be found to be effective, but there will be more deaths on the whole.

This is what seems to be happening with airbags. In a severe accident, airbags can save lives. However, they are inherently dangerous and pose a risk to the occupant. Our analyses show that in lower-speed crashes, the occupant is significantly more likely to die with an airbag than without. This effect is not seen in the analysis using FARS, because this database does not contain information about low-speed crashes without deaths.

Our analysis shows that previous estimates of airbag effectiveness are flawed, because a limited database was used. We have demonstrated this by reproducing their results, using a subsample of the CDS data. The new estimates of effectiveness suggest that airbags are not the lifesaving devices we have believed them to be.

Of course one should ask what the National Highway Traffic Safety Administration thinks of this. The journal asked them to respond and they did so. Their response, by Charles Kahane, was that the FARS data should be used since the CDS dataset that the authors used was too small to obtain significant results.

The authors of the Chance Magazine article replied that they were able to get significant results using the CDS data and that using the FARS data would be answering the wrong question. They write:

If a front-seat occupant wishes to ask the question, "If I get in an accident, am I less likely or more likely to die, if I have an airbag?" The proper way to answer this question is with the CDS dataset. With the FARS dataset, the question one can answer is, "If I get in a highway accident in which there is at least one fatality, am I less likely or more likely to die, if I have an airbag?"

The article itself does not include this exchange but you can read it here.

This would be a great article to discuss in a statistics class. The authors clearly explain the methods they used and why they used them. Since they have provided the data for their study, students could use it for their own analysis.
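As a starting point for such a discussion, the difference between the two questions can be illustrated with a toy calculation. Every number below is invented for illustration and is not taken from the CDS or FARS data; the model (two severity levels, one other person in the crash whose death is independent of the occupant's) is likewise an assumption. It only shows that a device can look protective among crashes with at least one fatality while raising the overall probability of death.

```python
# Hypothetical shares of crash severity; invented for illustration only.
SEVERITY_SHARE = {"low": 0.99, "high": 0.01}

# Hypothetical probability that the occupant of interest dies.
P_DEATH_OCCUPANT = {
    "no_airbag": {"low": 0.0, "high": 0.5},
    "airbag": {"low": 0.003, "high": 0.3},
}

# Hypothetical probability that the other person in the crash dies.
P_DEATH_OTHER = {"low": 0.0, "high": 0.5}

def risks(equipment: str) -> tuple[float, float]:
    """Return (P(occupant dies), P(occupant dies | crash has a fatality))."""
    p_die = 0.0
    p_fatal_crash = 0.0
    for severity, share in SEVERITY_SHARE.items():
        a = P_DEATH_OCCUPANT[equipment][severity]
        b = P_DEATH_OTHER[severity]
        p_die += share * a
        # A crash counts as fatal if the occupant or the other person dies.
        p_fatal_crash += share * (1 - (1 - a) * (1 - b))
    return p_die, p_die / p_fatal_crash

for equipment in ("no_airbag", "airbag"):
    overall, given_fatal = risks(equipment)
    print(f"{equipment:10s} overall={overall:.5f} given fatal crash={given_fatal:.3f}")
```

With these made-up inputs the overall risk is higher with the airbag, yet the risk conditional on a fatal crash is lower, because the low-speed crashes in which nobody dies never enter the fatal-crash subset.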

Submitted by Laurie Snell

Pick a number, any number

Problems with measures of government performance and productivity, The Economist, Nov 3rd 2005.

PSA targets: the devil in the detail, (UK) Statistics Commission, October 2005 - you can comment on the report up to the end of this month (Nov 2005).

A recent report from the Statistics Commission, an independent body that monitors UK official statistics, claims that many Government performance targets are seriously flawed. These Public Service Agreement (PSA) targets by, and for, government departments are intended to measure the desired outcomes from Government policies, in particular spending policies. There are a total of 102 separate PSA targets that were set in the 2004 Spending Review.

Some were criticised for being incomprehensibly complex, such as the 23 separate indicators for British research innovation performance, each with its own target and four milestones. The report says some targets would be missed if a single case falls below a given threshold. For example, if even one school out of 3,400 fails to bring half its pupils up to scratch in each of English, maths and science, then that target would be missed. In other cases, the necessary data doesn't exist.

A frequently-voiced criticism of the targets associated with previous spending reviews was that many had been defined without sufficient consideration of the Government’s ability to measure performance against them. That is, attempts to quantify some aspirational targets lead to the creation of targets that seem artificial or forced.
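To see how fragile an "every school must pass" target is, here is a rough sketch. It assumes each school meets the threshold independently with the same probability, which is a simplification, and the per-school probabilities are hypothetical values chosen for illustration, not figures from the report.

```python
def prob_target_met(n_schools: int, p_school_ok: float) -> float:
    """Probability that all n_schools meet the threshold, assuming independence."""
    return p_school_ok ** n_schools

N_SCHOOLS = 3400  # number of schools quoted in the report

# Hypothetical per-school success probabilities.
for p in (0.99, 0.999, 0.9999):
    print(f"p_school_ok={p}: P(target met)={prob_target_met(N_SCHOOLS, p):.4f}")
```

Even if each school were 99.9% likely to succeed, the chance that all 3,400 do so would be only a few percent under these assumptions.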

It is however obvious that the availability, credibility and validity of data indicating progress against these targets is fundamental to trust in the integrity of the target-setting process. The Commission is therefore concerned to assess whether the statistical evidence to support PSAs is adequate. The question of whether the statistical underpinnings are adequate can only be answered ‘bottom up’ by reviewing each and every target.

In view of the importance of PSA targets, the Commission recommends that more consideration should be given to the adequacy of the statistical infrastructure to support their future evolution.

What is needed is a robust cross-government planning system for official statistics that can pick up the future data requirements (to support the setting of targets) at the earliest possible stage and feed those effectively into the allocation of departmental resources.

The Commission recommends that government departments should pay more attention to data quality issues. It quotes the National Audit Office, which says

the allocation of clear responsibility for data quality and active management oversight of data systems would reinforce the importance of data quality.

The Royal Statistical Society has produced a report which comments on aspects of the PSA targets.

Further reading

Performance Indicators: Good, Bad and Ugly, Royal Statistical Society - Working Party on Performance Monitoring in the Public Services, which stresses that performance monitoring when done badly can be very costly, not merely ineffective but harmful and indeed destructive.

Submitted by John Gavin.

A roulette winner

Judge rules for Madrid gambler

The Guardian, June 24, 2004

Ben Sills in Granada

Court backs gambler

The Age, Madrid, June 24, 2004

Beginning in 1990, Gonzalo Garcia-Pelayo, with the help of friends and relatives, made a statistical study of the winning numbers in roulette in the Casino Gran Madrid. He found that some numbers were winning numbers as often as once in every 28 throws, apparently due to imperfections in the wheel, floors not being level, irregularly sized ball slots, etc.

The article reports that Gonzalo used his analysis to win more than a million euros during a two-year run. From another article we learn that Gonzalo was denied entry to the casino in 1992 but the government in 1994 overruled the casino. The casino then tried to get the courts to uphold its right to not let Gonzalo enter the casino. For ten years the case went through the courts and in 2004 Spain's Supreme Court ruled against the casino.
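A short calculation shows why a number that wins once in 28 throws matters. The sketch below assumes a single-zero wheel with 37 pockets and the usual 35-to-1 payout on a single-number bet; neither detail is stated in the articles, so both are assumptions.

```python
from fractions import Fraction

def expected_profit_per_unit(p_win: Fraction, payout: int = 35) -> Fraction:
    """Expected profit on a one-unit single-number bet paying payout-to-1."""
    return p_win * payout - (1 - p_win)

fair = expected_profit_per_unit(Fraction(1, 37))    # assumed unbiased wheel
biased = expected_profit_per_unit(Fraction(1, 28))  # frequency reported in the article

print(f"unbiased wheel: {fair} per unit ({float(fair):+.3f})")
print(f"biased number:  {biased} per unit ({float(biased):+.3f})")
```

Under these assumptions the casino's usual edge of 1/37 per unit staked turns into a player advantage of 2/7 per unit on the favoured number.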

According to The Age article:

The Supreme Court ruling said Garcia-Pelayo and company used "ingenuity and computer techniques. That's all."

The Guardian reports:

But despite this week's ruling he has no plans to return to Madrid's roulette tables. "I'm too well known," he said. Instead he plans to sue the casino for 1.2 million euros in lost earnings.

Gonzalo's story was the subject of a History Channel program and is available from their website as a DVD.

This was a part of the History Channel's "Breaking Vegas" series of 13 programs, which tell the stories of Ed Thorp, the MIT GROUP, and others who have tried to beat the casinos. You can read a review of this series here.

Battle of the sexes

Who has the bigger brain?, The Observer, Sunday November 6, 2005.

'Men cleverer than women' claim, BBC News on-line

The great intellectual divide, by Fiona McCray, Daily Mail, 25th August 2005.

Men 'grasping at straws' over intelligence claims, Donald MacLeod, The Guardian, August 25, 2005.

IQ claim will fuel gender row, Phil Baty, The Times Higher Educational Supplement, 26 August 2005.

In August, Dr Paul Irwing and Professor Richard Lynn announced that men are significantly cleverer than women and that male university students outstrip females by almost five IQ points. Their research was based on IQ tests given to 80,000 people and a further study of 20,000 students.

The academics used a test which is said to measure "general cognitive ability" - spatial and verbal ability. As intelligence scores among the study group rose, they claim they found a widening gap between the sexes. For example, there were twice as many men with IQ scores of 125, a level said to correspond with people getting first-class degrees. At scores of 155, associated with genius, there were 5.5 men for every woman.
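The way a modest difference in averages turns into large ratios in the upper tail can be sketched numerically. The model below is an assumption made for illustration, not the authors' method: both groups are taken to be normally distributed with the same standard deviation of 15, and the means are placed 5 points apart (102.5 and 97.5, hypothetical values).

```python
from statistics import NormalDist

SD = 15.0  # assumed common standard deviation
men = NormalDist(mu=102.5, sigma=SD)    # hypothetical mean
women = NormalDist(mu=97.5, sigma=SD)   # hypothetical mean

def tail_ratio(threshold: float) -> float:
    """Ratio of P(score >= threshold) between the two hypothetical groups."""
    return (1 - men.cdf(threshold)) / (1 - women.cdf(threshold))

for threshold in (125, 155):
    print(f"IQ >= {threshold}: ratio {tail_ratio(threshold):.2f}")
```

Under these assumptions the ratio at 125 comes out close to 2, while the ratio at 155 is larger but well short of 5.5, so an equal-variance normal model with a five-point shift would not by itself reproduce the larger figure.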

Their work is based on a technique known as meta-analysis. They examined dozens of previous studies of men's and women's IQs, research that had been carried out in different countries between 1964 and 2004 and published in a variety of different journals. Then they subjected these studies to an intense statistical analysis.

Dr Irwing told The Times

The differences may go some way to explaining the greater numbers of men achieving distinctions of various kinds, such as chess grandmasters, Fields medallists for mathematics, Nobel prize-winners and the like.

But just recently, the work of the two academics was attacked in unusually strong terms in the journal Nature, just as a paper officially outlining their work was published in the British Journal of Psychology. Tim Lincoln from Nature justified the attack by saying

We were made aware that Irwing and Lynn's results were based on a seriously flawed methodology, and had the opportunity to provide timely expert opinion when their paper became publicly available.

The author of the Nature paper, Dr Steve Blinkhorn, went further

Their study - which claims to show major sex differences in IQ - is simple, utter hogwash.

Blinkhorn said the pair were ignoring a vast body of work that had found no differences. In particular, Blinkhorn said

they chose to ignore a massive study, carried out in Mexico, which showed there was very little difference in the IQs of men and women. They say it is "an outlier" in data terms -- in other words, it was a statistical freak. It was nothing of the kind. It was just plain inconvenient. Had it been included, as it should have been, it would have removed a huge chunk of the differences they claim to have observed.

He goes on to say

Psychologists often carry out studies that find no differences between men's and women's IQs but don't publish them for the simple reason that finding nothing seems uninteresting. But you have to take these studies into account as well as those studies that do find differences. But Lynn and Irwing did not. That also skewed their results.

Blinkhorn also accuses the pair of adopting a variety of statistical manoeuvres that he describes, in his paper, as being 'flawed and suspect'. Last week Irwing defended the study saying

The study they had done also has to be seen in context of our other work which has shown significant sex differences in IQ. Nor is it true that we played about with our data.

Blinkhorn is unrepentant and states in Nature

Sex differences in average IQ, if they exist at all, are too small to be interesting.

The Guardian article finishes by highlighting other recent rows that have erupted over papers in leading journals.

- In 1998, Andrew Wakefield caused a furore when he wrote an article in the Lancet claiming a link between autism and the MMR vaccine. The paper led to a boycott of the vaccine by many parents in the UK, although scientists have been unable to establish any of his claims. Critics attacked the Lancet for publishing the paper.

- The Lancet journal was also criticised by Nobel laureate Aaron Klug for printing a paper claiming the immune systems of rats were damaged after they were fed genetically modified potatoes. The claims have never been substantiated.

- In contrast, last year's Nature paper, in which scientists revealed they had found remains of a race of tiny apemen, Homo floresiensis, has survived scrutiny despite claims that the fossils really belonged to deformed Homo sapiens. Research has since confirmed the original paper's results.

Perhaps not too surprisingly, the more female orientated Daily Mail highlights a competing survey. This time 1,500 women were surveyed by Perfectil, which makes health supplements. Highlighted results include:

- Women are taking the upper hand in relationships and growing in confidence about their looks.

- Almost two-thirds say they would ask a man to marry them rather than wait for him to pop the question, and one in three say they are comfortable chatting men up.

- As for sexual attraction, women are quick to know what they want. Twelve per cent say they know within ten seconds of starting a conversation with a man whether or not they fancy him. The majority make up their minds within five minutes.

- It found that women are becoming less and less bothered by wrinkles. Two in five want to age gracefully.

- Asked why they thought men were becoming obsessed with their looks, 88 per cent of the women said it was because men know they cannot be complacent as their wives or girlfriends may lose interest in them.

Questions

- Does the fact that Perfectil make health supplements influence your opinion of their survey's results? What additional information might you ask for to be sure their results are not biased?

Submitted by John Gavin.

Statistical Prediction Rules

In "Epistemology and the Psychology of Human Judgement" (2005, Oxford University Press), Michael Bishop and J.D. Trout argue that we need a new tent for various mathematical, computational, philosophical and psychological results called "Ameliorative Psychology" whose task would be to help people in their everyday reasoning by teaching them some simple-to-use statistical prediction rules (SPRs) that are demonstrably better than relying upon non-statistical rules (e.g., personal and clinical intuition).

It is argued that the identification and justification of statistical prediction rules is the proper task of epistemology and would make the discipline practically relevant to the overall goals of "Ameliorative Psychology". Bishop and Trout see SPRs as a strategic route by which the discipline of (applied) epistemology might become more practically relevant to improving the success of everyday reasoning.

Marital Happiness SPR

The Marital Happiness SPR is "a low-cost reasoning strategy for predicting marital happiness. Take the couple's rate of love making and subtract from it their rate of fighting. If the couple makes love more than they fight, then they will probably report being happy; if they fight more often than they make love, then they will probably report being unhappy." (p. 30)

The procedural version of this SPR might look like this:

if ((#L - #F) >= 0) report Happy else report UnHappy
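A runnable sketch of the same rule in Python is given below. The function and parameter names are chosen here for illustration. Following the pseudocode above, a tie (equal rates) is reported as "Happy"; the quoted passage does not say how ties should be handled, so that choice is an assumption.

```python
def marital_happiness_spr(lovemaking_rate: float, fighting_rate: float) -> str:
    """Predict self-reported marital happiness from two rates.

    Both rates must be measured over the same period (for example,
    occurrences per month). Ties are reported as "Happy", as in the
    pseudocode version of the rule.
    """
    return "Happy" if lovemaking_rate - fighting_rate >= 0 else "Unhappy"

print(marital_happiness_spr(8, 3))
print(marital_happiness_spr(2, 5))
```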

There is apparently good empirical evidence for this rule and one might therefore argue that teaching this rule to the general public might be a worthy goal of "Ameliorative Psychology" - it would offer reliable and simple-to-use quantitative guidance when reasoning about the causes and correlates of marital happiness.

SPRs are successful in the long run

Statistical prediction rules are useful for the other girl/guy. We often feel that 1) our clinical judgement in a particular situation is very good or 2) the present case is different in some important respect that obviates the need for a statistical prediction rule to reason about this particular instance. Bishop and Trout caution about overconfidence bias in our abilities to predict the future better than an SPR. Also, the occasional and convenient use of an SPR renders the assumptions of its use invalid.

What Paul Meehl hath wrought upon us

The authors frequently cite the work of Paul Meehl. Paul Meehl, who died in 2003, was a clinical psychology methodologist who long advocated the use of statistical prediction rules in clinical reasoning instead of relying on "clinical intuition". His groundbreaking research in the 1950s showed that SPRs generally out-performed clinical psychologists in diagnostic decision making.

Meehl, P. 1954. Clinical versus Statistical Prediction: A Theoretical Analysis and a Review of the Evidence. Minneapolis: University of Minnesota Press.

Bishop and Trout's work can be viewed as generalizing Meehl's epistemic/methodological conclusions and recommendations beyond the domain of clinical psychology to the task of using SPRs to improve everyday reasoning more generally.

Further properties of SPRs

Applied epistemologists should not be concerned with helping to formulate and teach SPRs for solving trivial problems. Users should be able to readily use SPRs for solving significant everyday problems. Recommended SPRs should be fairly robust in the face of small differences in the nature of the problem. An SPR should be more reliable than other methods of prediction for that domain; if not, it should be more feasible to use than a potentially superior method of prediction. This cost/benefit approach to identifying, teaching, and using SPRs is called "Strategic Reliabilism" and is the foundational epistemological theory Bishop and Trout use to justify their recommendations.