Chance News 30

Revision as of 14:45, 30 October 2007

Quotation

Keep probability in view.

Fable XVIII

Forsooth

The following Forsooth was suggested by John Vokey.

Vitamin D can lower risk of death by 7 percent

Martin Mittelstaedt

Globe and Mail

September 11, 2007

This is an interesting example. In the article we read that "people who were given a vitamin D supplement had a 7-per-cent lower risk of premature death than those who were not" and "It appears to be a life extender." So perhaps many of our forsooths arise because copy editors write the headlines. (Laurie Snell)

The following Forsooth was suggested by Gregory Kohs.

The [Philadelphia] Eagles’ problems in the red zone finally appeared to have been fixed when Donovan McNabb connected with tight end Matt Schobel for a 13-yard score with 4:57 left. It was the Eagles’ first touchdown in four trips inside the 20 during yesterday’s game. Entering the contest, the Eagles had scored just five touchdowns in 16 possessions in the red zone.

Note that before the game against the Bears, the Eagles had scored touchdowns on 5 of 16 trips inside the 20-yard line, a 31.25% touchdown rate. This "problem" was apparently solved by a game with a 25% (1 of 4) red zone touchdown rate.
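The arithmetic is easy to check. The short Python sketch below is an illustration added here and is not part of the submission. It computes three rates: the rate entering the game, the rate in the game itself, and the season-to-date rate once the game is included. The last of these actually drops.

```python
from fractions import Fraction

# Red-zone touchdown rates quoted in the Forsooth above.
before = Fraction(5, 16)         # touchdowns / red-zone trips entering the game
game = Fraction(1, 4)            # touchdowns / red-zone trips in yesterday's game
after = Fraction(5 + 1, 16 + 4)  # season-to-date rate once the game is included

for label, rate in [("before", before), ("game", game), ("after", after)]:
    print(f"{label:>6}: {float(rate):.2%}")
# before: 31.25%
#   game: 25.00%
#  after: 30.00%
```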

This Forsooth was suggested by Jerry Grossman.

Since Jerry submitted this, the Times has added the correction:

An article last Sunday about the odds of winning in the baseball playoffs misstated the probability that a team with a 40 percent chance of winning an individual game would also win a best-of-five series. The team would win the series nearly one-third of the time, not more than one-third of the time.

The article stated that:

Even if one team were so superior to its opponent as to have a 60 percent chance of winning any particular game — and such imbalances are quite rare in baseball — elemental probability theory says that the poorer team will still win at least three of five games nearly one-third of the time.

New York Times, October 14, 2007
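Here is a minimal Python sketch showing where the corrected figure comes from. Like the article, it assumes that games are independent and that the weaker team wins any single game with probability 0.4. A series stops once one team has three wins, but stopping early does not change who wins. So the chance of winning the series equals the chance of winning at least three of five games, which is a binomial tail.

```python
from math import comb

def series_win_prob(p: float, games: int = 5) -> float:
    """Probability of winning a majority of `games` independent games,
    each won with probability p (binomial upper tail)."""
    need = games // 2 + 1
    return sum(comb(games, k) * p**k * (1 - p)**(games - k)
               for k in range(need, games + 1))

print(round(series_win_prob(0.4), 5))  # 0.31744
```

The result is about 31.7 percent. That is nearly one-third, but not more than one-third, which is exactly what the correction says.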

Supplement may help treat gambling addiction

Minneapolis Star Tribune, September 12, 2007

Maura Lerner

There seems to be a never-ending supply of questionable statistical studies. Consider the recent Minneapolis Star Tribune account of September 12, 2007. A University of Minnesota researcher publishing in the September 15, 2007 issue of Biological Psychiatry treated "27 pathological gamblers for eight weeks" with an amino acid supplement, N-acetyl cysteine. "By the end, 60 percent said they had fewer urges to gamble." Of the 16 who reported a benefit, "13 remained in a follow-up study...five out of the six on the supplement reported continued improvement, compared to two out of seven on a placebo." According to the researcher, "There does seem to be some effect, but you would need bigger numbers."

Here are the results of the follow-up study as seen by Minitab:

MTB > PTwo 6 5 7 2.

Test and CI for Two Proportions

| Sample | X | N | Sample p |
|---|---|---|---|
| 1 | 5 | 6 | 0.833333 |
| 2 | 2 | 7 | 0.285714 |

Difference = p (1) - p (2)

Estimate for difference: 0.547619

95% CI for difference: (0.0993797, 0.995858)

Test for difference = 0 (vs not = 0): Z = 2.39 P-Value = 0.017

Fisher's exact test: P-Value = 0.103

NOTE: The normal approximation may be inaccurate for small samples.
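Readers without Minitab can use the Python sketch below, which relies on the standard library only and should reproduce the numbers above. It uses two choices that are assumptions on our part rather than anything stated in the output.

- The z test uses an unpooled standard error. This is the choice that gives Z = 2.39; a pooled standard error gives a smaller Z.
- The two-sided Fisher p-value uses the common definition: it sums the probabilities of all tables, with the same margins, that are no more probable than the observed table. Other two-sided conventions exist.

```python
from math import comb, sqrt
from statistics import NormalDist

def two_proportion_z(x1, n1, x2, n2, conf=0.95):
    """Unpooled two-proportion z test and normal-approximation CI for p1 - p2."""
    p1, p2 = x1 / n1, x2 / n2
    diff = p1 - p2
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    z = diff / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    z_crit = NormalDist().inv_cdf(0.5 + conf / 2)
    return diff, (diff - z_crit * se, diff + z_crit * se), z, p_value

def fisher_exact_two_sided(x1, n1, x2, n2):
    """Two-sided Fisher exact p-value: total hypergeometric probability of all
    tables with the observed margins that are no more likely than the observed one."""
    successes, total = x1 + x2, n1 + n2
    def weight(k):  # numerator of P(X = k); the common denominator is comb(total, n1)
        return comb(successes, k) * comb(total - successes, n1 - k)
    observed = weight(x1)
    lo, hi = max(0, n1 - (total - successes)), min(n1, successes)
    extreme = sum(weight(k) for k in range(lo, hi + 1) if weight(k) <= observed)
    return extreme / comb(total, n1)

diff, ci, z, p_z = two_proportion_z(5, 6, 2, 7)
fisher_p = fisher_exact_two_sided(5, 6, 2, 7)
# Compare with Minitab above: Z = 2.39, P-Value = 0.017; Fisher's exact P-Value = 0.103
print(f"diff={diff:.6f} CI=({ci[0]:.6f}, {ci[1]:.6f}) Z={z:.2f} P={p_z:.3f}")
print(f"Fisher exact P={fisher_p:.3f}")
```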

Discussion

1. Assume you are a frequentist. What can you say about statistical significance? Note the discrepancy between the exact P-Value and the P-Value based on the normal approximation.

2. Assume you are a Bayesian and thus immune to P-Values, whether exact or based on a normal approximation. Pick your priors and find the probability that there is a difference between the effect of the supplement and the effect of the placebo.

3. Aside from the choice of inference procedure, frequentist or Bayesian, what other flaws do you see in this study with regard to sample size and measurement of success?

4. Speculate as to why this study was reported in a Twin Cities newspaper and probably not elsewhere.

5. Speculate on what might happen if the 11 who did not respond to the supplement originally were put in the follow-up study.
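Question 2 leaves the choice of prior to the reader. As one possible choice only, the sketch below puts independent uniform Beta(1, 1) priors on the two response rates. It then updates each rate with the follow-up counts (5 of 6 and 2 of 7) and estimates by simulation the posterior probability that the supplement's rate exceeds the placebo's. Reading "a difference" as P(p1 > p2) is our interpretation. Under these priors the exact answer is about 0.968, and other priors will give other answers.

```python
import random

def prob_supplement_better(x1, n1, x2, n2, a=1.0, b=1.0, draws=100_000, seed=1):
    """Monte Carlo estimate of P(p1 > p2 | data), assuming independent Beta(a, b)
    priors, so each posterior is Beta(a + successes, b + failures)."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        p1 = rng.betavariate(a + x1, b + n1 - x1)  # posterior draw, supplement
        p2 = rng.betavariate(a + x2, b + n2 - x2)  # posterior draw, placebo
        wins += p1 > p2
    return wins / draws

print(prob_supplement_better(5, 6, 2, 7))  # about 0.97 with uniform priors
```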

Submitted by Paul Alper

Excel 2007 arithmetic error

Calculation Issue Update, David Gainer, September 25, 2007.

The Excel blog at Microsoft usually talks about product enhancements and future development plans, but on September 25 it had to admit an embarrassing problem with basic arithmetic in Excel 2007. A series of calculations such as 77.1*850, 20.4*3,212.5, 10.2*6,425 and 5.1*12,850 should produce a value of 65,535, but instead they display a result of 100,000. The result is actually stored in an acceptable binary form. The flaw occurs when that binary representation is converted to decimal form for display.

Although there are infinitely many rational numbers, a computer can represent only a finite number of them in its storage. For the rest, the computer has to choose a nearby binary value. Certain fractions, such as 1/3 and 1/7, have infinite expansions in decimal notation and have to be truncated. Every such fraction also has an infinite expansion in binary. So do many others, including numbers with very simple decimal representations such as 1/10 = 0.1. Truncating these binary expansions creates a slight inaccuracy. Because of it, a product such as 77.1*850 comes out not exactly 65,535 but slightly larger or smaller.
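Python floats are 64-bit IEEE 754 binary numbers, the same format Excel uses to store values. We assume here that the two agree on these particular operations. The sketch below shows the exact values actually stored for 0.1 and 77.1. It also shows that the product 77.1*850 misses 65,535 by a tiny amount.

```python
from decimal import Decimal
from fractions import Fraction

# The double nearest to 0.1 is a fraction with a power-of-two denominator, not 1/10.
print(Fraction(0.1))  # 3602879701896397/36028797018963968
print(Decimal(0.1))   # 0.1000000000000000055511151231257827021181583404541015625

# 77.1 is stored slightly below 77.1, so the product falls just short of 65,535.
product = 77.1 * 850
print(Decimal(77.1))                    # exact decimal expansion of the stored value
print(product == 65535, repr(product))  # False 65534.99999999999
```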

According to the Microsoft blog, there are six binary numbers between the decimal values 65,534.99999999995 and 65,535 that are not displayed properly in Excel 2007. Another six binary numbers between 65,535.99999999995 and 65,536 have the same problem. You can't enter these numbers directly in Excel, because Excel rounds any directly entered value to 15 significant digits.
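Microsoft's count of six problem values in each interval can be checked directly, again assuming IEEE 754 doubles. The sketch steps through consecutive doubles with `math.nextafter`, which requires Python 3.9 or later. It also shows what a correct 15-significant-digit display looks like for the largest double below 65,535. That binary-to-decimal display step is the one the Microsoft blog says was flawed in Excel 2007.

```python
import math

def doubles_strictly_between(lo: float, hi: float) -> int:
    """Count the IEEE 754 doubles x with lo < x < hi by stepping with nextafter."""
    count, x = 0, math.nextafter(lo, math.inf)
    while x < hi:
        count += 1
        x = math.nextafter(x, math.inf)
    return count

print(doubles_strictly_between(65534.99999999995, 65535.0))  # 6
print(doubles_strictly_between(65535.99999999995, 65536.0))  # 6
print(math.ulp(65535.0) == 2**-37)  # True: adjacent doubles here are 2**-37 apart

# Rounded to 15 significant digits, the largest double below 65,535
# should display as plain 65535.
x = math.nextafter(65535.0, 0.0)
print(f"{x:.15g}")  # 65535
```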

It's probably not a coincidence that these numbers are close to 2^16: such values have long strings of consecutive 1's in their binary representation. An entry on the Wolfram Blog by Mark Sofroniou, Arithmetic is Hard--To Get Right, speculates that the problem lies in the propagation of carries.
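The long runs of 1's are easy to see. This sketch assumes IEEE 754 doubles and prints the bit pattern of the largest double just below 65,535. It illustrates the binary structure only. It is not an attempt to reproduce Excel's display routine.

```python
import math

x = math.nextafter(65535.0, 0.0)  # largest double below 65,535, i.e. 65535 - 2**-37
print(x.hex())                    # 0x1.fffdfffffffffp+15

# Scaling by 2**37 turns the 53-bit significand into an exact integer.
bits = format(int(x * 2**37), "b")
print(len(bits), bits)            # 53 bits: 15 ones, a single 0, then 37 ones
longest_run = max(len(run) for run in bits.split("0"))
print(longest_run)                # 37
```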

This bug only affects values close to 65,535 and 65,536 when they are displayed as a final result. Intermediate calculations that produce one of these unfortunate 12 numbers are unaffected because it is the process of converting the binary representation to a decimal form for display that is flawed.

The bug also does not appear to affect earlier versions of Excel. This error is reminiscent of the Pentium FDIV bug.

Questions

1. According to the Microsoft blog, there are 9.2*10^18 possible binary values, and only 12 of them are affected by this bug. Would it be safe to say that the probability that any individual would encounter this bug is 12 / (9.2*10^18) = 1.3*10^-18?

2. It is impossible to test every possible arithmetic calculation in a computer system. How would you design a testing system that evaluated a representative sample of possible arithmetic calculations?

Submitted by Steve Simon

Uncertainties of Life

Statistics would be unnecessary if we lived in a strictly deterministic universe. Indeed, statistics and variability go together, except in journalism, where, despite decades of statistical education, the notion of variability is virtually nonexistent. A recent example may be found in an AP release of September 26, 2007 entitled "New Drug May Make Tumors Self-Destruct." The article refers to a presentation "at a meeting of the European Cancer Organization in Barcelona" and concerns a drug, "STA-4783, which has no effect on normal cells" while "causing tumor cells to self-destruct by overloading them with oxygen."

The article states that "the drug doubled the amount of time that advanced melanoma patients survived without their cancer worsening": an average of 1.8 months vs. an average of 3.7 months. For overall survival, the figures were an average of 7.8 months vs. an average of 12 months. We are told that "28 received the standard chemotherapy" and that 53 received the standard chemotherapy plus STA-4783. However, there is not a whisper about variation in either arm of the study.

Discussion

1. Consider the "28" and "53" in the respective arms of the study. What does that imbalance suggest regarding dropout rates and/or randomization?

2. Assuming you are a frequentist, speculate on why there is no mention of P-Value, statistical significance or effect size.

3. The word "average" appears many times in the article without saying which average is meant. Would the mean or the median be more appropriate here? Explain.

4. Explain why a box plot would be very enlightening.

5. The article says "the drug doubled the amount of time that advanced melanoma patients survived without their cancer worsening." Looking at the data, how impressive is this in your eyes? That is, how do you view the "practical" significance of two extra months on average?

6. Although STA-4783 supposedly has no side effects, no mention is made of the potential cost of the drug. If it is deemed successful, speculate on what patients may have to pay for it.

Submitted by Paul Alper

Depressed people follow a different power law distribution

Something in the way he moves, The Economist, Sep 27th 2007.

This Economist article claims that depressed people move in a mathematically different way from other people. The paper underlying the article makes two assertions:

- The durations of resting periods (periods of low physical activity) follow a power law distribution.

- The resulting distributions differ between healthy and clinically depressed people, to such an extent that this test could be used to diagnose clinical depression.

Power law distributions have been previously discussed in Chance News on numerous occasions.

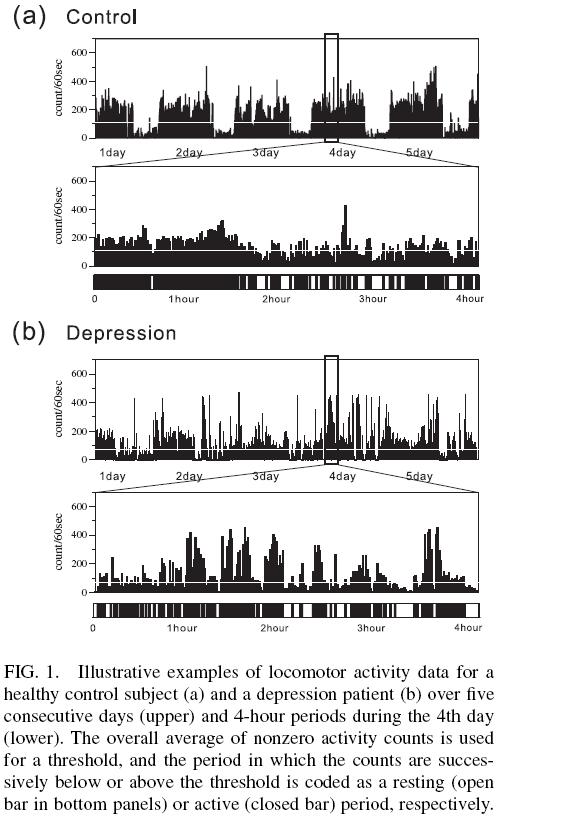

The data used to derive these conclusions come from fourteen patients with major depressive disorder and eleven age-matched healthy control subjects. Accelerometers detected small changes in the acceleration of the subjects' wrists. Even slight movements, ranging from writing or working on a computer to physical exercise, were registered every minute for several days. The resulting data are counts per minute of the number of times the accelerometer registered a movement.

The attached figure, taken from the original paper, plots the raw count data over time for five days. The authors claim that these figures

show a clear circadian rest-activity cycle in the control subject, while in the patient, such a rhythmic pattern is much disrupted, reflecting the reported chronobiological abnormality in depression

In other words, the results highlight a difference in the way that healthy and depressed people spread their resting periods over the day. The depressed patient shows bursts in the activity counts, while the control subject is characterized by more sustained activity levels. This basic result confirms a known feature of depression:

The normal daily rhythm that would lead to a high, steady number of counts during daylight hours and low counts during the night was replaced by occasional bursts of activity.

To aggregate the data over time, the authors estimated the cumulative distributions of the durations of both resting and active periods. A resting (active) period's duration is defined as the length of time that the count per minute remains below (above) some chosen threshold.
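The period definition above can be sketched in a few lines of Python. This is our illustration, not the authors' code, and it rests on several assumptions:

- The counts are a hypothetical toy series.
- The threshold of 10 counts per minute is arbitrary.
- A count exactly equal to the threshold is treated as active.
- Zero-count minutes are kept. The original analysis excluded them; this sketch does not.

The second function computes the empirical complementary cumulative distribution, P(duration >= d).

```python
from itertools import groupby

def period_durations(counts, threshold):
    """Split a per-minute activity-count series into consecutive runs and return
    the run lengths (minutes) of resting runs (count < threshold) and of active
    runs (count >= threshold; the tie rule is our assumption)."""
    resting, active = [], []
    for is_rest, run in groupby(counts, key=lambda c: c < threshold):
        (resting if is_rest else active).append(sum(1 for _ in run))
    return resting, active

def ccdf(durations):
    """Empirical complementary cumulative distribution P(D >= d) at each observed d."""
    n = len(durations)
    return [(d, sum(x >= d for x in durations) / n) for d in sorted(set(durations))]

# Hypothetical toy series (not data from the paper), one count per minute.
counts = [4, 5, 3, 40, 50, 2, 1, 1, 1, 60, 7]
resting, active = period_durations(counts, threshold=10)
print(resting, active)  # [3, 4, 1] [2, 1]
print(ccdf(resting))    # [(1, 1.0), (3, 0.6666666666666666), (4, 0.3333333333333333)]
```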

The Economist claims that the surprising result in the paper is that plotting the lengths of low-activity periods against their frequency produces strikingly different patterns in healthy versus depressed people. It goes on to speculate that this discovery may provide another way of diagnosing major depression.

The power-law result holds only for resting periods, in both patients and controls, and the authors report that it is consistent across different choices of the threshold. During active periods, by contrast, the cumulative distribution takes a 'stretched exponential' form.

The authors claim:

Surprisingly, these statistical laws, after being rescaled by the mean waiting times, are not affected by altering the threshold value to calculate the waiting times. They share the distribution form for individuals considerably different in their daily living, and regardless of whether healthy or suffering from major depression, therefore suggesting the presence of universal laws governing human behavioral organization.
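The fitting procedure the authors used is not described here. The following is therefore only a sketch of one standard way to estimate a power-law exponent, the continuous maximum-likelihood (Hill-type) estimator. It is checked on synthetic Pareto data, not on the study's data. Real durations are whole minutes, so this continuous estimator is an approximation, and the choice of the cutoff `x_min` (our hypothetical parameter name) matters. The last lines illustrate the rescaling mentioned in the quote. Dividing every duration, and `x_min`, by the mean leaves the estimated exponent unchanged, because the estimator depends only on the ratios x / x_min.

```python
import math
import random

def power_law_alpha_mle(samples, x_min):
    """Continuous MLE of alpha for a density proportional to x**(-alpha), x >= x_min.
    The corresponding CCDF decays like x**(-(alpha - 1))."""
    tail = [x for x in samples if x >= x_min]
    return 1 + len(tail) / sum(math.log(x / x_min) for x in tail)

# Synthetic check: random.paretovariate(a) has CCDF x**(-a) for x >= 1,
# so its density exponent is alpha = a + 1 = 2.5 here.
rng = random.Random(0)
samples = [rng.paretovariate(1.5) for _ in range(100_000)]
alpha = power_law_alpha_mle(samples, x_min=1.0)
print(round(alpha, 2))  # close to 2.5

# Rescale by the mean, as in the quote; the exponent estimate does not change.
mean = sum(samples) / len(samples)
rescaled = [x / mean for x in samples]
print(math.isclose(power_law_alpha_mle(rescaled, 1.0 / mean), alpha))  # True
```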

The Economist summarises a corollary conjectured by the authors:

(this result) also raises an interesting question about the nature of depression itself. That is because, when he looked for similar power-law curves in other areas, the one which he thought most resembled that exhibited by the depressed turned out to be the pattern of electrical activity shown by nerve cells isolated in a Petri dish and unable to contact their neighbours.

It is both unnerving and intriguing that a mental disorder which isolates people from human society, and which must surely have its origins in some malfunction of the nerve cells, is reflected in the behaviour of cells that have themselves been isolated. Maybe this is just a coincidence but maybe it is not.

Questions

- All the patients wore the accelerometer (aka activity monitor aka motion logger) on the wrist of their non-dominant hand. Would you expect results to differ materially if the other hand, or a leg, was used?

- The fourteen depressed patients wore their accelerometers for at least 18 days each, rising to 42 days in some cases. All eleven healthy controls wore their accelerometers for seven days. Does it matter that the time periods are much longer for the patients relative to the controls?

- If so, how might a statistical analysis adjust for this?

- Would it matter if the data from each patient was not taken from one continuous time period?

- Are there enough patients in this experiment? Patients and controls were age-matched; why do you think this was necessary? On the other hand, the sex of patients and controls is not mentioned. Do you care?

- The severity of depression of the patients was mild to moderate. Should the results have accounted for the degree of severity of the clinically depressed patients?

- Can you map out in your mind how you might build a statistical model to do this? The objective might be a diagnostic tool for clinical depression, in which case, your model would have to be capable of distinguishing between mild (non-clinical) and chronic depression. Is the preliminary result outlined in this article sufficient to justify the time/expense of this extra work, in your view?

- Do you think that the intensity of a person's movements, rather than just the count of such movements per minute, would be more informative? Why do you think this type of measurement was not recorded?

- The data consists of the number of accelerometer counts per minute. Minute periods for which the count was zero were excluded from the analysis. Can you guess why the authors did this? Might it affect the results in some way?

- The maximum number of counts that the accelerometer can count in one minute is 300. Is this relevant in any context?

- Do you know what a 'stretched exponential' distribution or a complementary cumulative distribution function is? Can you relate the former to a more commonly known distribution in statistics, and do you know a more common term for the latter?

- The authors say the Kolmogorov-Smirnov test is used throughout. What other tests might you like to see to distinguish patients from controls?

- Do any of your tests account for the time order in which the data is recorded? If not, why not?

- The authors report a p-value of 0.0001 for the different scaling exponents in the two fitted power law distributions. Does the low p-value surprise you? How does it relate to the number of people in the sample? The authors mention that this p-value is associated with a significantly longer (p < 0.001) mean resting-period duration in the patients (15 minutes) than in the control subjects (7 minutes). How might the number of patients and controls affect this statistic's p-value?

- If the graphs in the figure above are typical of all patients, as claimed, why would anyone bother to calculate a statistical test? Isn't it obvious from just exploring the data that there is overwhelming empirical evidence that the patterns in the two time series are completely different? Is there likely to be a statistical test that would show no difference between the two datasets?

- Are you comfortable with the conjecture that it might be more than coincidence that electrical activity in nerve cells also follows a power law distribution?
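Several of the questions mention the Kolmogorov-Smirnov test. The sketch below is ours, not the authors' code. It computes the two-sample KS statistic, which is the largest vertical gap between the two empirical cumulative distribution functions. It treats each sample as an unordered set of values, so shuffling the durations in time leaves the statistic unchanged, which bears on the question about time order. A p-value would also require the null distribution of the statistic, which this sketch omits. The toy durations are hypothetical.

```python
from bisect import bisect_right

def ks_statistic(sample1, sample2):
    """Two-sample Kolmogorov-Smirnov statistic: the largest absolute gap between
    the two empirical CDFs, checked at every observed value (where the step
    functions jump, so the maximum is attained there)."""
    s1, s2 = sorted(sample1), sorted(sample2)
    n1, n2 = len(s1), len(s2)
    return max(abs(bisect_right(s1, x) / n1 - bisect_right(s2, x) / n2)
               for x in s1 + s2)

# Hypothetical resting-period durations in minutes, for illustration only.
print(ks_statistic([1, 2, 3, 4], [3, 4, 5, 6]))  # 0.5
```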

Further reading

- Universal Scaling Law in Human Behavioral Organization, Yoshiharu Yamamoto et al., Univ. of Tokyo, Phys. Rev. Lett. 99 (2007).

- This short four-page paper is currently available for free from the author's website.

- Trivia: while browsing links related to this article, I stumbled across a neat graphical summary of the numbers of letters sent and received by Darwin and Einstein over their lifetimes. The response times of both men follow similar power law distributions (Source: Nature, 437, 1251).

Submitted by John Gavin.

Is poker predominantly a game of skill or luck? Revisited

In Chance News 29 (http://chance.dartmouth.edu/chancewiki/index.php/Chance_News_29) we discussed Ryne Sherman's work, which provides a way to quantify skill in poker and in other games. Sherman's papers were published in the Internet magazine Two + Two, Vol. 3, Nos. 5 and 6. They are no longer available there, since this journal makes its articles available for only three months. Ryne has given us permission to put his articles on the Chance website, and we have done so. They are A Conceptualization and Quantification of Skill (http://www.dartmouth.edu/~chance/forwiki/Sherman1) and More on Skill and Individual Differences (http://www.dartmouth.edu/~chance/forwiki/Sherman2). Follow the links to obtain PDF versions of his papers.

We have also revised our discussion in Chance News 29 to better explain Ryne's method as applied to poker. In his second article Ryne gives tables showing the results of applying his method to a wide variety of games.