Chance News 87: Difference between revisions

Revision as of 20:46, 20 July 2012

Quotations

From The Theory That Would Not Die, 2011:

“There has not been a single date in the history of the law of gravitation when a modern significance test would not have rejected all laws [about gravitation] and left us with no law.”

“No one has ever claimed that statistics was the queen of the sciences…. The best alternative that has occurred to me is ‘bedfellow.’ Statistics – bedfellow of the sciences – may not be the banner under which we would choose to march in the next academic procession, but it is as close to the mark as I can come.”

When asked how to differentiate one Bayesian from another, a biostatistician cracked, “Ye shall know them by their posteriors.”

Submitted by Margaret Cibes

Forsooth

MHC dating and mating

Immunology is a branch of biomedical science that covers the study of all aspects of the immune system in all organisms.[1] It deals with the physiological functioning of the immune system in states of both health and diseases; malfunctions of the immune system in immunological disorders (autoimmune diseases, hypersensitivities, immune deficiency, transplant rejection); the physical, chemical and physiological characteristics of the components of the immune system in vitro, in situ, and in vivo. Immunology has applications in several disciplines of science, and as such is further divided.

Within one of those divisions is the study of MHC, the major histocompatibility complex. From Chance News 39: MHC, which referenced Wikipedia:

The major histocompatibility complex (MHC) is a large genomic region or gene family found in most vertebrates. It is the most gene-dense region of the mammalian genome and plays an important role in the immune system, autoimmunity, and reproductive success.

It has been suggested that MHC plays a role in the selection of potential mates, via olfaction. MHC genes make molecules that enable the immune system to recognise invaders; generally, the more diverse the MHC genes of the parents, the stronger the immune system of the offspring. It would obviously be beneficial, therefore, to have evolved systems of recognizing individuals with different MHC genes and preferentially selecting them to breed with.

It has been further proposed that, despite humans having a poor sense of smell compared to other organisms, the strength and pleasantness of sweat can influence mate selection. Proving all of this statistically (that is, showing that by means of olfaction we somehow sense and select mates who differ from us in MHC) via a convincing clinical trial is a challenge. Nevertheless, several things are in its favor. For one, unlike a medical trial, it is quite inexpensive to have subjects smell a series of odoriferous T-shirts of contributors. For another, the lay media are sure to publicize the study with lame and juvenile headlines. The previously mentioned Chance News post describes several clinical trials and the lay media reaction.

The latest manifestation of the linkage between MHC and mate selection is illustrated by this newspaper article which appeared throughout the country. Pheromone parties would appear to be the next big thing, replacing online dating. The only thing needed to make a match is a smelly T-shirt in a freezer bag. The article quotes Prof. Martha McClintock, founder of the Institute for Mind and Biology at the University of Chicago: “Humans can pick up this incredibly small chemical difference with their noses. It is like an initial screen.”

Discussion

1. MHC in humans is often called HLA, human leukocyte antigen.

2. An expert in the field informed Chance News that the cost of MHC serological typing is about $100 per person but is “not as accurate as molecular methods.” Previous T-shirt studies mentioned in Chance News involved about 100 people, thus an outlay of about $10,000.

3. The expert referred to in #2 says he serologically typed his former girlfriends and that, as is often alleged, each was indeed MHC dissimilar to him. No T-shirt was involved. He is “convinced it [olfaction] is a real biological phenomenon” because “it makes sense” and because of “the large amount of non-human data that supports it.” However, he further adds,

I would not recommend HLA testing for couples, because there are too many other sociological and physical variables that play into human ideas of attractiveness and mate preference. And from a strictly biological advantage standpoint, it doesn’t really matter anymore (see below).

The alleles that provide a selective advantage for pathogen protection are presumably the alleles that are most common, especially when maintained as a haplotype, which is the A-B-C-DR complex on one chromosome. For instance, A1, B8, DR17 haplotype is found in approx. 5% of Caucasians. But now that medical science allows for survival from infections via antimicrobics (antibiotics), there really is no such thing as immunologic evolution, because an infection that would have killed someone in childhood without the appropriate HLA 100 years ago can be saved, and go on to breed and pass the genes to their offspring. Science has, in essence, negated the Darwinian evolution process, particularly when it comes to the immune system.

4. Chance News also contacted several research immunologists to obtain their opinion on the T-shirt phenomenon. One had never heard of it and the other was very skeptical.

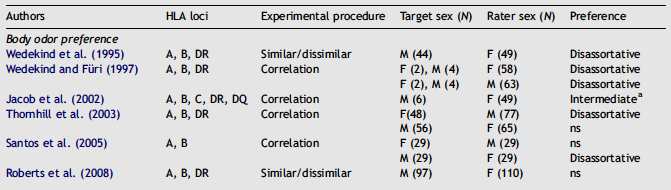

5. The following table of T-shirt smelling is taken from a review article by Roberts where “ns” stands for not [statistically] significant.

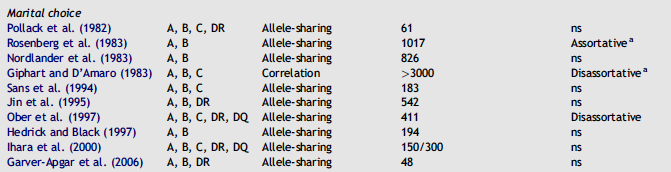

The following table regarding marital choice is from the same article. How does this compare and contrast with the T-shirt table above?

6. A study regarding marital (mating) choice that is not mentioned in the above table is “Is Mate Choice in Humans MHC-Dependent?”

In this study, we tested the existence of MHC-disassortative mating in humans by directly measuring the genetic similarity at the MHC level between spouses. These data were extracted from the HapMap II dataset, which includes 30 European American couples from Utah and 30 African couples from the Yoruba population in Nigeria.

For the 30 African couples, the authors conclude

African spouses show no significant pattern of similarity/dissimilarity across the MHC region (relatedness coefficient, R = 0.015, p = 0.23), whereas across the genome, they are more similar than random pairs of individuals (genome-wide R = 0.00185, p < 10⁻³).

For the 30 Utah couples, the authors conclude

On the other hand, the sampled European American couples are significantly more MHC-dissimilar than random pairs of individuals (R = −0.043, p = 0.015), and this pattern of dissimilarity is extreme when compared to the rest of the genome, both globally (genome-wide R = −0.00016, p = 0.739) and when broken into windows having the same length and recombination rate as the MHC (only nine genomic regions exhibit a higher level of genetic dissimilarity between spouses than does the MHC). This study thus supports the hypothesis that the MHC influences mate choice in some human populations.

Comment on the last sentence especially with regard to the size of the sample(s) and the strength of the conclusion.

7. Even if you are neither of Yoruba ancestry nor a resident of Utah, ask your parents if they are willing to have a serological MHC test to determine if they contributed to your genetic wellbeing.

8. Chance News was unable to find any MHC studies, T-shirt or otherwise, regarding the reproductive strategy or mate selection for homosexuals.
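The comparison in item 6 between actual spouses and "random pairs of individuals" can be illustrated with a permutation test. The following is only a minimal sketch under stated assumptions: the `relatedness` matrix and the function name `permutation_p_value` are hypothetical, and nothing here is claimed to be the procedure the study's authors used. It asks how often randomly re-paired couples look at least as dissimilar as the real ones.

```python
import random

def permutation_p_value(relatedness, n_perm=2000, seed=0):
    """One-sided permutation test for spouse dissimilarity.

    relatedness[i][j] is a hypothetical relatedness score between
    husband i and wife j; the true couples sit on the diagonal.
    Returns the observed mean relatedness of true couples and the
    share of random re-pairings that are at least as dissimilar.
    """
    rng = random.Random(seed)
    n = len(relatedness)
    observed = sum(relatedness[i][i] for i in range(n)) / n

    wives = list(range(n))
    as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(wives)  # re-pair husbands with random wives
        shuffled = sum(relatedness[i][wives[i]] for i in range(n)) / n
        if shuffled <= observed:
            as_extreme += 1

    # Add-one correction so the estimated p-value is never exactly zero.
    return observed, (as_extreme + 1) / (n_perm + 1)


# Made-up example: 30 couples, each markedly dissimilar to their own spouse.
n = 30
dissimilar = [[-1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
print(permutation_p_value(dissimilar))
```

With only 30 couples the observed mean rests on few data points, which is one reason the sample-size question in item 6 matters.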

Submitted by Paul Alper

Grading on the curve

“Microsoft’s Lost Decade,” Vanity Fair, August 2012

(Preview here; access to full article requires subscription)

This article is an extensive critique of Bill Gates’ successor at Microsoft. One alleged management problem was related to the staff performance evaluation system.

At the center of the cultural problems was a management system called “stack ranking.” …. The system – also referred to as “the performance model,” “the bell curve,” or just “the employee review” – has, with certain variations over the years, worked like this: every unit was forced to declare a certain percentage of employees as top performers, then good performers, then average, then below average, then poor.

“If you were on a team of 10 people, you walked in the first day knowing that, no matter how good everyone was, two people were going to get a great review, seven were going to get mediocre reviews, and one was going to get a terrible review,” said a former software developer. ….

Supposing Microsoft had managed to hire technology’s top players into a single unit before they made their names elsewhere – Steve Jobs of Apple, Mark Zuckerberg of Facebook, Larry Page of Google, Larry Ellison of Oracle, and Jeff Bezos of Amazon – regardless of performance, under one of the iterations of stack ranking, two of them would have to be rated as below average, with one deemed disastrous.

Discussion

The stack ranking system is said to have sometimes been referred to as a “bell curve.” If a system were supposed to follow a bell, or normal, curve, would you have agreed with any of the outcomes described for a team of 10 people? For a team of the 5 “top players”? Why or why not?
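As an aid to the discussion, here is a rough sketch of what a normal curve would imply for five performance bands. The band cutoffs (at ±0.5 and ±1.5 standard deviations) and the function names are assumptions chosen for illustration; the article does not say what percentages Microsoft used.

```python
from statistics import NormalDist

# Hypothetical band cutoffs in standard deviations (not from the article).
CUTOFFS = [-1.5, -0.5, 0.5, 1.5]
LABELS = ["poor", "below average", "average", "good", "top"]

def band_shares(cutoffs=CUTOFFS):
    """Share of a standard normal population falling in each band."""
    z = NormalDist()
    edges = [0.0] + [z.cdf(c) for c in cutoffs] + [1.0]
    return [hi - lo for lo, hi in zip(edges, edges[1:])]

def expected_counts(team_size, cutoffs=CUTOFFS):
    """Expected number of team members per band under the normal model."""
    return [team_size * share for share in band_shares(cutoffs)]

for label, count in zip(LABELS, expected_counts(10)):
    print(f"{label:>13}: {count:.2f} of 10")
```

Under these assumed cutoffs the expected counts are symmetric around "average," and they describe a large population rather than guaranteeing any particular split within one small team.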

Submitted by Margaret Cibes

Big, brief bang from new stadiums

“Do new stadiums really bring the crowds flocking?”

by Mike Aylott, Significance web exclusive, April 11, 2012

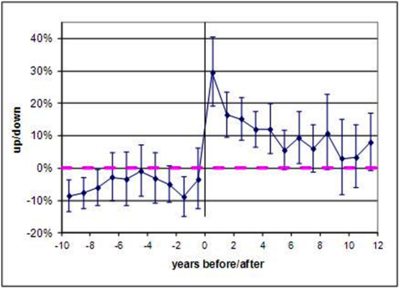

The author has studied how long the boom of a new stadium lasts, by considering all 30 relocating British soccer clubs up until 2011, and their average home league attendance for all ten seasons before and after relocation.

[T]he most important three factors have been accounted for in this analysis: a) the division the club is playing in that season, b) what position in this division the club finishes, and c) the overall average attendance in this division for this season.

See the graph below for the percent change in average attendance after adjusting for the three factors. The author notes that the pink dotted line refers to non-relocating clubs and that the error bars denote ±2 standard errors.
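For readers unfamiliar with error bars of ±2 standard errors, the following minimal sketch shows how such a bar could be computed for one season. The attendance changes are made-up numbers and `mean_with_error_bar` is a hypothetical helper; the sketch does not reproduce the author's adjustment for the three factors.

```python
import math
import statistics

def mean_with_error_bar(values, k=2):
    """Return (mean, lower, upper), where the bar is mean ± k standard errors."""
    mean = statistics.mean(values)
    # Standard error of the mean: sample standard deviation / sqrt(n).
    se = statistics.stdev(values) / math.sqrt(len(values))
    return mean, mean - k * se, mean + k * se


# Hypothetical percent changes in attendance for six clubs in one season.
changes = [12.0, 30.5, -4.0, 18.2, 25.1, 7.3]
mean, lower, upper = mean_with_error_bar(changes)
print(f"mean change {mean:.1f}%, bar from {lower:.1f}% to {upper:.1f}%")
```

A bar that excludes zero suggests, roughly, that the average change for that season is distinguishable from no change at about the 5% level, assuming the clubs can be treated as independent observations.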

Submitted by Margaret Cibes

Communicating chances

“Safer gambling”, by Tristan Barnett, Significance web exclusive, April 4, 2012

In [Gaming Law Review and Economics] 2010 I suggested, as an approach towards responsible gambling and to increase consumer protection, to amend poker machine regulations such that the probabilities associated with each payout are displayed on each machine along with information that would advise players of the chances of ending up with a certain amount of profit after playing for a certain amount of time.
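The kind of information Barnett proposes displaying could, in principle, be estimated by simulation. The sketch below assumes a made-up payout table (`PAYOUTS`) with a return to player of 86%; the table, the function names, and the session lengths are all hypothetical and are not taken from his paper.

```python
import random

# Hypothetical payout table for a 1-unit bet: (payout, probability).
PAYOUTS = [(0, 0.75), (2, 0.18), (5, 0.06), (20, 0.01)]

def expected_return():
    """Average payout per unit bet under the assumed table."""
    return sum(payout * prob for payout, prob in PAYOUTS)

def session_profit(rng, spins):
    """Net profit after a session of `spins` 1-unit bets."""
    payouts = [p for p, _ in PAYOUTS]
    weights = [w for _, w in PAYOUTS]
    wins = rng.choices(payouts, weights=weights, k=spins)
    return sum(wins) - spins

def chance_of_profit(spins, threshold=0, trials=5000, seed=0):
    """Estimated probability of finishing with profit above `threshold`."""
    rng = random.Random(seed)
    hits = sum(session_profit(rng, spins) > threshold for _ in range(trials))
    return hits / trials


print(f"return to player: {expected_return():.2f}")
for spins in (10, 100, 1000):
    print(spins, "spins: chance of ending ahead ~", chance_of_profit(spins))
```

Under this assumed table, the estimated chance of ending ahead shrinks as the session gets longer, which is the sort of message a display on the machine might convey.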

Questions

What do you think of this idea? Do you think it would encourage “responsible gambling”?

Submitted by Margaret Cibes

Blue dot technique

In the last edition of Chance News, we reported on the fraudulent data case involving Dutch researcher Dirk Smeesters. More on the case can be found in this ScienceInsider story (26 June 2012), which references the Erasmus University report for the following:

Smeesters conceded to employing the so-called "blue-dot technique," in which subjects who have apparently not read study instructions carefully are identified and excluded from analysis if it helps bolster the outcome. According to the report, Smeesters said this type of massaging was nothing out of the ordinary. He "repeatedly indicates that the culture in his field and his department is such that he does not feel personally responsible, and is convinced that in the area of marketing and (to a lesser extent) social psychology, many consciously leave out data to reach significance without saying so."

But what in the world is the "blue-dot technique"? An answer can be found in this Research Digest post (a blog from the British Psychological Society), where a comment by Richard Gill at Leiden University (the same Prof. Gill as in Chance News 86) explains that

The blue dot test is that there's a blue dot somewhere in the form which your respondents have to fill in, and one of the last questions is "and did you see the blue dot"? Those who didn't see it apparently didn't read the instructions carefully. Seems to me fine to have such a question and routinely, in advance, remove all respondents who gave the wrong answer to this question. The question is whether Smeesters only used the blue dot test as an excuse to remove some of the respondents, and only used it after an initial analysis gave results which were decent but in need of further "sexing up" as he called it.
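Gill's distinction between excluding respondents "routinely, in advance" and excluding them only when it helps can be illustrated with a small simulation. This is a sketch under stated assumptions, not a reconstruction of anything Smeesters did: there is no true effect, outcomes are standard normal, each subject fails the blue dot question with probability 0.2, and the analyst uses a simple z-test with known unit variance. All names are hypothetical.

```python
import math
import random

def two_sided_p(a, b):
    """Two-sample z-test p-value, assuming unit variance in both groups."""
    if len(a) < 2 or len(b) < 2:
        return 1.0
    diff = sum(a) / len(a) - sum(b) / len(b)
    z = diff / math.sqrt(1 / len(a) + 1 / len(b))
    return math.erfc(abs(z) / math.sqrt(2))

def simulate(n_studies=5000, n_per_group=20, p_fail=0.2, alpha=0.05, seed=1):
    """Return false-positive rates for (pre-specified, opportunistic) exclusion."""
    rng = random.Random(seed)
    honest_hits = flexible_hits = 0
    for _ in range(n_studies):
        # Each subject: (outcome, failed_blue_dot). No true group difference.
        groups = [
            [(rng.gauss(0, 1), rng.random() < p_fail) for _ in range(n_per_group)]
            for _ in range(2)
        ]
        everyone = [[x for x, _ in g] for g in groups]
        passed = [[x for x, failed in g if not failed] for g in groups]
        p_all = two_sided_p(*everyone)
        p_passed = two_sided_p(*passed)
        # Pre-specified rule: always exclude those who failed the question.
        honest_hits += p_passed < alpha
        # Opportunistic rule: report whichever analysis reaches significance.
        flexible_hits += min(p_all, p_passed) < alpha
    return honest_hits / n_studies, flexible_hits / n_studies


honest, flexible = simulate()
print(f"pre-specified exclusion: {honest:.3f}, opportunistic: {flexible:.3f}")
```

In this setup the pre-specified rule should keep the false-positive rate near the nominal 5%, while choosing between the two analyses after seeing the results can only raise it.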

Submitted by Paul Alper